The Rise of Transformers in Vision and Multimodal Models – Hugging Face – day 72

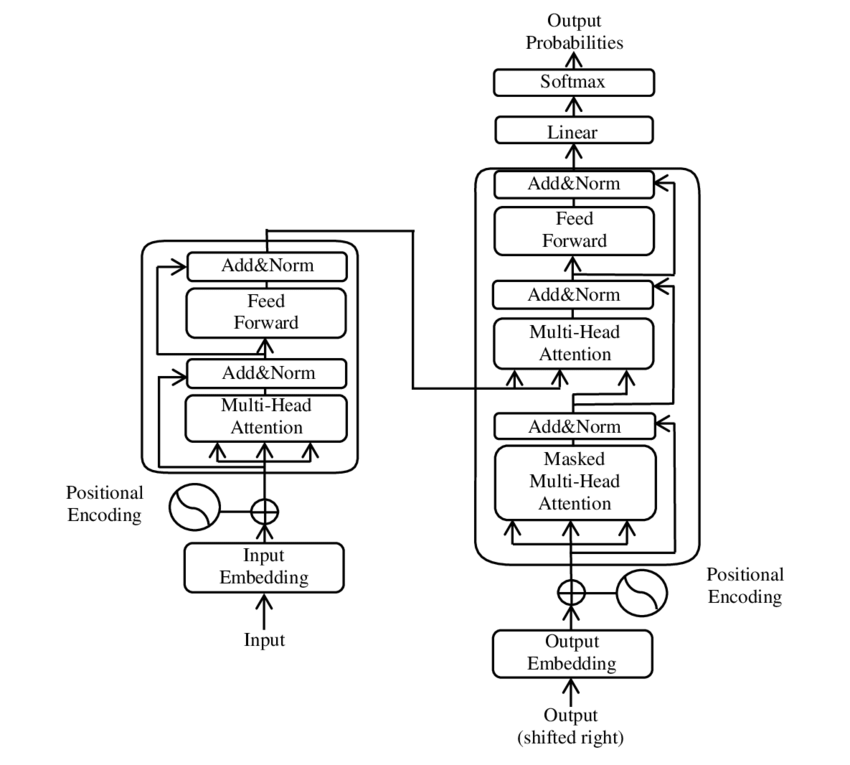

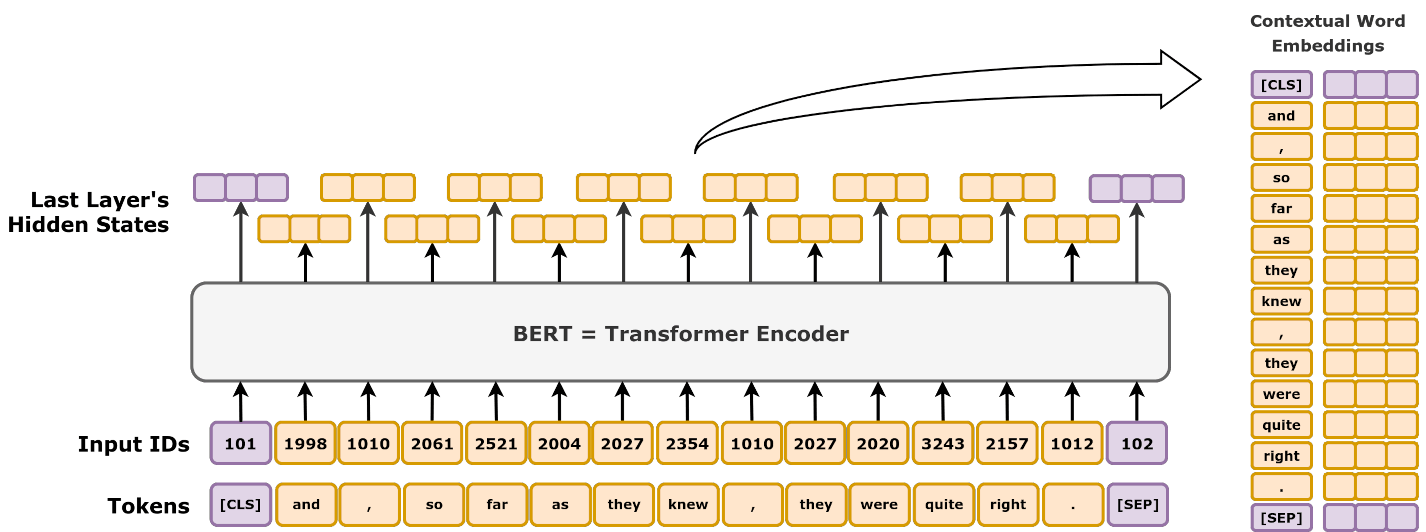

In this first part of our blog series, we’ll explore how transformers, originally created for Natural Language Processing (NLP), have expanded into Computer Vision (CV) and even multimodal tasks, handling text, images, and video in a unified way. This will set the stage for Part 2, where we will dive into using Hugging Face and code examples for practical implementations.

1. The Journey of Transformers from NLP to Vision

The introduction of transformers in 2017 revolutionized NLP, but researchers soon realized their potential for tasks beyond just text. Originally used alongside...