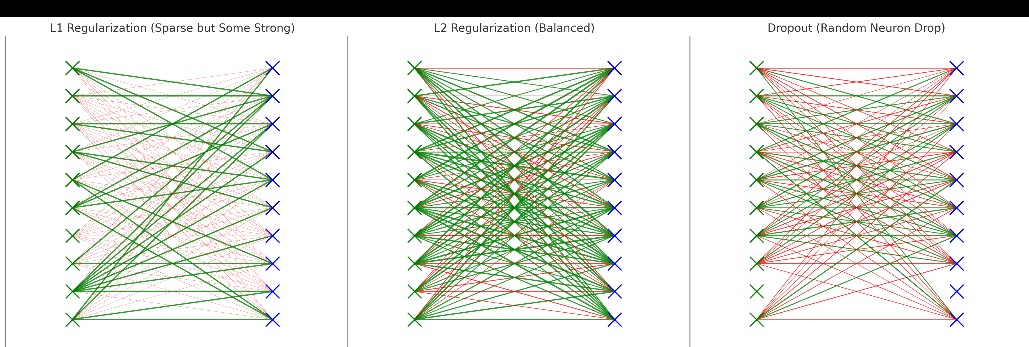

Understanding Regularization in Deep Learning – A Mathematical and Practical Approach Introduction One of the most compelling challenges in machine learning, particularly with deep learning models, is overfitting. This occurs when a model performs exceptionally well on the training data but fails to generalize to unseen data. Regularization offers solutions to this issue by controlling the complexity of the model and preventing it from overfitting. In this post, we’ll explore the different types of regularization techniques—L1, L2, and dropout—diving into their mathematical foundations and practical implementations. What is Overfitting? In machine learning, a model is said to be overfitting when it learns not just the actual patterns in the training data but also the noise and irrelevant details. While this enables the model to perform well on training data, it results in poor performance on new, unseen data. The flexibility of neural networks, with their vast number of parameters, makes them highly prone to overfitting. This flexibility allows them to model very complex relationships in the data, but without precautions, they end up memorizing the training data instead of generalizing from it. Regularization is the key to addressing this challenge. L1 and L2 Regularization: The Mathematical Backbone L1 Regularization (Lasso Regression) L1 regularization works by adding a penalty equal to the absolute value of the weights to the loss function. Mathematically, this is represented as: Where: is the original loss function (e.g., cross-entropy or mean squared error), is a regularization constant that controls the strength of regularization, are the weights of the model. L1 regularization promotes sparsity in the model. This means it forces many of the weights to zero, effectively removing certain features from the model. This can be useful when you expect that only a small subset of features will be significant for your task. Why Use L1? The reason L1 regularization is so useful in certain contexts is because it encourages the model to ignore irrelevant features. By forcing many of the weights to zero, it simplifies the model, making it more interpretable and preventing overfitting. L2 Regularization (Ridge Regression or Weight Decay) L2 regularization penalizes the squared values of the weights rather than the absolute values. The L2 regularized loss function is: While L1 regularization forces some weights to zero, L2 regularization ensures that all the weights are small but not necessarily zero. This helps keep the model smooth and reduces overfitting without eliminating features entirely. Why Use L2? L2 regularization spreads the influence of all the input features more evenly, making it more suitable in cases where you expect all features to have some impact on the output. It’s the more commonly used regularization technique, particularly when the aim is to reduce overfitting without making the model sparse. Dropout: A Different Approach While L1 and L2 regularization modify the loss function, dropout takes a different approach. Introduced by Hinton et al., dropout randomly “drops” a certain percentage of neurons during training. By forcing the network to operate without certain neurons, dropout ensures that individual neurons don’t become too reliant on each other. This promotes robustness in the network and prevents overfitting. Mathematically, dropout can be viewed as randomly setting the outputs of some neurons to zero with a probability . During testing, all neurons are used, but their activations are scaled down by to compensate for the missing neurons during training. Why Use Dropout? Dropout is particularly useful in deep neural networks, where overfitting is a common issue due to the high number of parameters. By introducing randomness, dropout ensures that the network cannot co-adapt too much to the training data, which helps generalize to unseen data. How Regularization Works in Gradient Descent In a typical training scenario, the model updates its weights using gradient descent. Without regularization, the update rule for weight is: Where: is the learning rate, is the gradient of the loss function with respect to . With L2 regularization, the update rule changes to: This extra term penalizes large weights and shrinks them towards zero, effectively controlling the complexity of the model. For L1 regularization, the update rule becomes: Here, is the sign of , which drives the weights toward zero for small values, promoting sparsity. Practical Implementation: Regularization in Keras In practice, implementing these regularization techniques is relatively straightforward in libraries like Keras. For example, applying L2 regularization to a dense layer is as simple as: from tensorflow.keras import regularizers model.add(Dense(64, kernel_regularizer=regularizers.l2(0.01))) Similarly, you can apply dropout like this: model.add(Dropout(0.5)) Here, 50% of the neurons will be dropped during each training iteration, making the network more robust. TO NOTE NOW Regularization is a critical tool for ensuring that deep learning models generalize well to unseen data. Whether it’s promoting sparsity through L1 regularization, shrinking weights through L2 regularization, or introducing randomness through dropout, each method has its strengths and can be used in various scenarios depending on the nature of the data and the model. Incorporating these regularization techniques into your deep learning models can significantly improve their performance on new data, ensuring they don’t just memorize the training set but instead learn meaningful, generalizable patterns. AspectL1 Regularization (Lasso)L2 Regularization (Ridge)DropoutWhen to Use (Crystal Clear Use Case)Penalty TermSum of the absolute values of the weights. Sum of the squares of the weights. No explicit penalty term, but neurons are randomly “dropped” during training, forcing the network to rely on different sets of neurons in each iteration.**How to Know Which One to Use**: L1 Regularization (Lasso): Use when you suspect **many features are irrelevant or redundant**. L1 regularization is best suited for **feature selection** and **sparse models**. If you notice your model has many coefficients that are likely insignificant (especially in high-dimensional data), L1 will automatically drive some weights to zero, effectively eliminating irrelevant features. L2 Regularization (Ridge): Use when you believe **all features contribute**, but you want to prevent overfitting by reducing large weights. L2 regularization ensures all features contribute, but it minimizes the impact of each, controlling the magnitude. In datasets where **multicollinearity** (high correlation between variables) is an issue, L2 helps stabilize the model. Dropout: Use when working with **deep neural networks**, especially in fully connected layers, where **overfitting** is likely due to the high number of parameters. If your model shows signs of overfitting (e.g., the training accuracy is much higher than test accuracy), dropout can force the model to rely less on specific neurons, improving generalization. **Mathematical Proof**: L1: Minimizing the L1 penalty causes some weights to become zero, reducing the complexity of the model. This is seen in the optimization process where the gradient updates are smaller for irrelevant weights, eventually pushing them to zero:. L2: The L2 penalty shrinks weights uniformly without eliminating any. This is effective when you want to reduce large weights but keep all features in the model:. Dropout: By dropping neurons at random during training, the model learns to be more robust, generalizing better across datasets. The randomization forces the model to learn more distributed representations of the data. Effect on WeightsDrives some weights to exactly zero, creating a sparse model by eliminating irrelevant features. Reduces the magnitude of weights, but none are driven to exactly zero. Weights are shrunk by , but they never disappear. Randomly “drops” neurons during training, zeroing their output temporarily to encourage the network to rely on different subsets of neurons.Why Weights Go to Zero or NotWeights go to zero because the penalty is based on the absolute value of the weights. This non-differentiability at zero means that the gradient can easily push small weights to zero, leading to sparsity.Weights do not go to zero because the penalty is based on the square of the weights, which is differentiable everywhere. Weights are reduced in magnitude, but none are eliminated completely.Weights are not permanently changed, but during training, some neurons are temporarily set to zero. This prevents the network from relying too much on any single set of neurons.Computational ComplexityMore complex, as L1 is not differentiable at zero, which can make optimization slower.Less complex because L2 is differentiable everywhere, making optimization smoother.Increases training time as the model needs more iterations to converge due to the random nature of dropout.Example ScenarioIn text classification, where only a small subset of words are predictive, L1 will drive irrelevant word features to zero, creating a sparse and interpretable model.In regression models where multicollinearity is an issue, L2 regularization helps shrink all coefficients, making the model more stable without eliminating any feature.In a deep neural network, dropout helps prevent overfitting by ensuring that the model does not rely too heavily on any one set of neurons, improving generalization.Mathematical ExampleFor a linear regression problem with L1 regularization:This drives irrelevant weights to zero, eliminating unnecessary features.For a linear regression problem with L2 regularization:This shrinks weights but doesn’t drive them to zero, keeping all features.In a neural network with dropout, the output of neurons is zeroed out with probability . This prevents neurons from co-adapting during training. Wonder, Why Do Weights Go to Zero with L1 Regularization but Not with L2 Regularization? Lets do in this part a Numerical Proof with Multiple Iterations to know why In machine learning, understanding why L1 regularization drives some weights to zero while L2 regularization does not is critical when choosing a regularization technique for your model. In this part, we will walk through multiple iterations of weight updates using both L1 and L2 regularization to show how L1 leads to sparsity (weights going to zero) while L2 shrinks weights but keeps them non-zero. Overview of L1 and L2 Regularization L1 Regularization (Lasso): Adds a penalty based on the absolute value of the weights. This often results in some weights being driven to zero, effectively removing irrelevant features and making the model sparse. L2 Regularization (Ridge): Adds a penalty based on the square of the weights. This shrinks weights uniformly but never reduces them to zero, meaning all features continue to contribute to the model. Mathematical Background: Why L1 Drives Weights to Zero and L2 Does Not L1 Regularization The cost function for L1 regularization is: The key term is , the absolute value of the weights. Since the absolute value is non-differentiable at zero, small weights are often pushed all the way to zero during optimization. This leads to sparsity, where irrelevant features are discarded. L2 Regularization The cost function for L2 regularization is: The key term is , the square of the weights. Since the square function is differentiable everywhere, it causes weights to shrink gradually but not reach zero. This maintains the contribution of all features, though their influence is reduced. Numerical Example: Multiple Iterations of L1 and L2 Regularization Let’s walk through a numerical example, applying both L1 and L2 regularization to show how the weights evolve over multiple iterations of gradient descent. Assumptions We have two weights: and . Regularization strength . Learning rate . Assume a simple gradient for both weights. Without Regularization In the absence of regularization, the weights remain unchanged by the regularization penalty. Initial weights: theta_1 = 1.5 theta_2 = 0.5 After one iteration of gradient descent (assuming the gradient ): theta_1 = 1.5 – 0.1 * 1 = 1.4 theta_2 = 0.5 – 0.1 * 1 = 0.4 This continues for more iterations, but the weights are simply reduced by the learning rate multiplied by the gradient without any additional penalty. L1 Regularization (Iteration by Iteration) Let’s see what happens to the weights when L1 regularization is applied over several iterations. Iteration 1 theta_1 = 1.5 – 0.1 * (1 + 0.1 * sign(1.5)) = 1.5 – 0.11 = 1.39 theta_2 = 0.5 – 0.1 * (1 + 0.1 * sign(0.5)) = 0.5 – 0.11 = 0.39 Iteration 2 theta_1 = 1.39 – 0.1 * (1 + 0.1 * sign(1.39)) = 1.39 – 0.11 = 1.28 theta_2 = 0.39 – 0.1 * (1 + 0.1 * sign(0.39)) = 0.39 – 0.11 = 0.28 Iteration 3 theta_1 = 1.28 – 0.1 * (1 + 0.1 * sign(1.28)) = 1.28 – 0.11 = 1.17 theta_2 = 0.28 – 0.1 * (1 + 0.1 * sign(0.28)) = 0.28 – 0.11 = 0.17 Iteration 4 theta_1 = 1.17 – 0.1 * (1 + 0.1 *…

Understanding Regularization in Deep Learning – Day 47