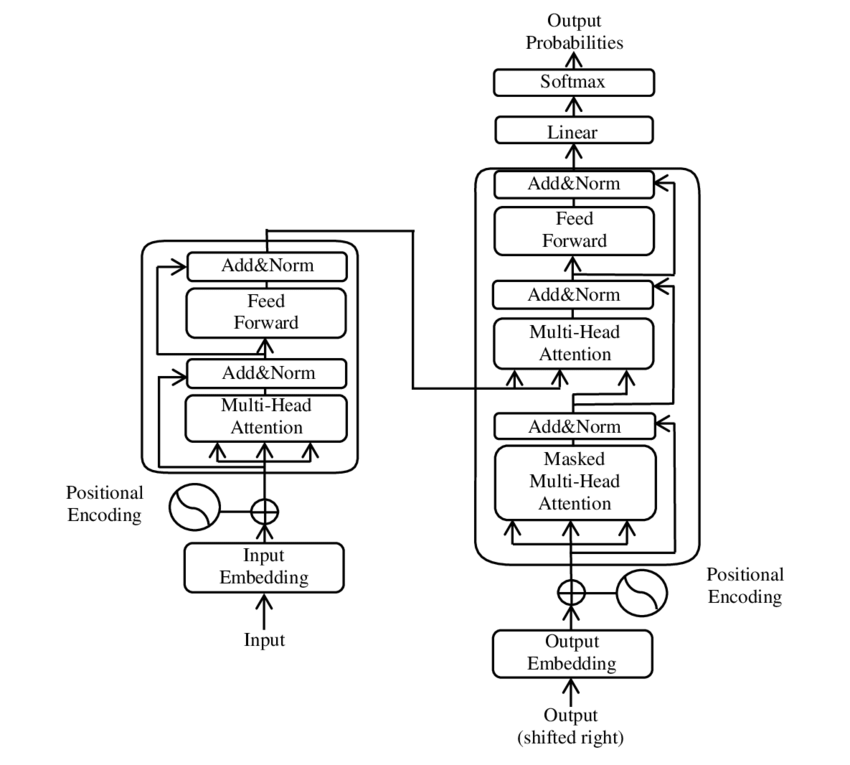

## Transformer Models Comparison

| Feature | BERT | GPT | BART | DeepSeek | Full Transformer |
|---|---|---|---|---|---|
| Uses Encoder? | ✅ Yes | ❌ No | ✅ Yes | ❌ No | ✅ Yes |
| Uses Decoder? | ❌ No | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes |
| Training Objective | Masked Language Modeling (MLM) | Autoregressive (predict next word) | Denoising autoencoding | Autoregressive next-token prediction (Mixture-of-Experts architecture with Multi-head Latent Attention) | Sequence-to-Sequence (Seq2Seq) |
| Bidirectional? | ✅ Yes | ❌ No | ✅ Yes (encoder) | ❌ No | Encoder yes, decoder no |
| Application | NLP tasks (classification, Q&A, search) | Text generation (chatbots, summarization) | Text generation and comprehension (summarization, translation) | Advanced reasoning tasks (mathematics, coding) | Machine translation, speech-to-text |

Table 1: Comparison of Transformers, RNNs, and CNNs

| Feature | Transformers | RNNs | CNNs |
|---|---|---|---|
| Processing Mode | Parallel | Sequential | Localized (convolution) |
| Handles Long Dependencies | Efficient | Struggles with long sequences | Limited in handling long dependencies |
| Training Speed | Fast (parallel) | Slow (sequential) | Medium speed due to parallel convolution |
| Key Component | Attention mechanism | Recurrence (LSTM/GRU) | Convolutions |
| Number of Layers | 6–24 layers per encoder/decoder | 1–2 (or more for LSTMs/GRUs) | Typically 5–10 layers |
| Backpropagation | Through attention and feed-forward layers | Backpropagation Through Time (BPTT) | Standard backpropagation |

## Self-Attention Mechanism

The self-attention mechanism allows each word in a sequence to attend to every other word, capturing relationships between distant parts of the input. This is what lets Transformers model long-range dependencies, which RNNs often struggle with because of vanishing gradients. Here's how self-attention works:

1. **Query (Q), Key (K), and Value (V) vectors:** Each word in the input sequence is transformed into Q, K, and V vectors through learned linear transformations. These vectors let the model determine how important each word is relative to the others.
2. **Scaled dot-product attention:** Attention scores are calculated as the dot product of the Q and K vectors, scaled by the square root of the dimensionality of the key vectors, and passed through a softmax to obtain attention weights.
3. **Weighted sum:** The attention weights are applied to the Value vectors to form the output.

## Multi-Head Attention

Transformers use multi-head attention, an extension of self-attention that lets the model learn multiple representations of the input simultaneously. Each attention "head" uses a different set of learned parameters, so different heads can focus on different aspects of the input, such as syntactic or semantic relationships.

- **Parallel attention heads:** Multiple attention heads (eight in the original base Transformer) process the input in parallel, allowing the model to capture various types of relationships.
- **Aggregation:** The results from each head are concatenated and linearly transformed to produce a single combined representation of the input.

## Positional Encoding

Since Transformers process inputs in parallel, they need to be told the order of words in the sequence. Positional encodings are added to the input embeddings to provide information about each word's position. The original Transformer used sinusoidal functions to encode positions; later models such as BERT and GPT-2 use learned positional embeddings instead.

Table 2: Example of Positional Encoding Values (simplified, illustrative)

| Word | Positional Encoding | Word Embedding | Final Input to Transformer |
|---|---|---|---|
| "The" | 0.001 | [1.1, 0.9, …] | [1.101, 0.901, …] |
| "cat" | 0.002 | [1.4, 0.6, …] | [1.402, 0.602, …] |
| "sat" | 0.003 | [1.2, 0.7, …] | [1.203, 0.703, …] |

In practice the positional encoding is a vector with the same dimensionality as the word embedding; the table shows a single scalar per position for simplicity.

## Transformer Architecture: Encoder and Decoder

The Transformer follows an encoder-decoder structure. Both the encoder and the decoder consist of a stack of identical layers with multi-head attention and feed-forward components. The encoder converts the input into an attention-based representation, while the decoder generates the output using this representation.
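To make the positional encoding and self-attention sections concrete, here is a minimal sketch in plain Python (no external libraries). It builds sinusoidal positional encodings, adds them to token embeddings, and runs one head of scaled dot-product attention, `softmax(Q·Kᵀ / √d_k)·V`. Everything specific in it is an assumption made for illustration: the four-dimensional toy embeddings, the random untrained projection matrices standing in for learned weights, and the helper names `positional_encoding`, `matmul`, `softmax`, and `self_attention`.

```python
import math
import random


def positional_encoding(seq_len, d_model):
    """Sinusoidal encoding: sine on even dimensions, cosine on odd ones."""
    table = []
    for pos in range(seq_len):
        row = []
        for j in range(d_model):
            # Dimensions 2i and 2i+1 share the same frequency.
            angle = pos / (10000 ** ((2 * (j // 2)) / d_model))
            row.append(math.sin(angle) if j % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    peak = max(row)
    exps = [math.exp(value - peak) for value in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    k_transposed = [list(col) for col in zip(*k)]
    # Step 2: dot products of queries and keys, scaled by sqrt(d_k), then softmax.
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_transposed)]
    weights = [softmax(row) for row in scores]
    # Step 3: weighted sum of the value vectors.
    return matmul(weights, v), weights


# Hypothetical toy embeddings (not taken from any trained model).
tokens = ["The", "cat", "sat"]
embeddings = [
    [0.2, 0.7, -0.1, 0.4],
    [0.9, -0.3, 0.5, 0.1],
    [-0.4, 0.6, 0.3, -0.2],
]
d_model = 4

# Add position information to each embedding.
pe = positional_encoding(len(tokens), d_model)
x = [[e + p for e, p in zip(emb, pos)] for emb, pos in zip(embeddings, pe)]

# Random, untrained matrices stand in for the learned Q, K, V projections.
rng = random.Random(0)


def random_matrix(rows, cols):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


w_q, w_k, w_v = (random_matrix(d_model, d_model) for _ in range(3))
output, weights = self_attention(x, w_q, w_k, w_v)

for token, row in zip(tokens, weights):
    print(token, [round(w, 3) for w in row])
```

Each printed row shows how strongly one token attends to every token in the sequence, and each row sums to 1. A multi-head version would run several such heads with separate projection matrices, concatenate their outputs, and apply one more linear transformation.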
- **Encoder:** Processes the input through multiple layers of self-attention and feed-forward networks. Each layer includes residual connections and layer normalization, which stabilize training and let gradients flow efficiently through deep networks.
- **Decoder:** Similar to the encoder, but each layer includes an additional (cross-)attention mechanism that attends to the encoded representations while generating the output sequence. Its self-attention is masked so that the prediction for each token considers only the previous tokens, which preserves the autoregressive nature of generation.

## Applications of Transformers

Transformers are used across a wide range of NLP tasks, which demonstrates their versatility and efficiency.

Table 3: Applications of Transformer Models

| Application | Transformer Model | Description |
|---|---|---|
| Machine Translation | Transformer | Translates between languages |
| Text Summarization | BART | Summarizes long documents into shorter text |
| Question Answering | BERT | Extracts answers from a given context |
| Text Generation | GPT-3 | Generates human-like text based on input prompts |

Recent advancements also include Vision Transformers (ViTs), which apply the Transformer architecture to image recognition by treating image patches as tokens.

## Understanding Transformers: The Backbone of Modern NLP

### Introduction

Transformers have reshaped the field of Natural Language Processing (NLP). Introduced in the 2017 paper "Attention Is All You Need" by Vaswani et al., the Transformer replaced recurrent (RNN) and convolutional (CNN) architectures with an entirely attention-based design. The new architecture trained faster and gave more accurate results in tasks like machine translation, text summarization, and beyond.
## Detailed Comparison of Modern Language Models

| Feature/Model | Transformers | BERT | ChatGPT | LLMs | Gemini | Claude | DeepSeek |
|---|---|---|---|---|---|---|---|
| Architecture | Encoder-decoder (self-attention) | Encoder (bidirectional) | Decoder (autoregressive) | Based on Transformer architecture | Transformer-based, multimodal | Transformer-based, multimodal | Mixture-of-Experts (MoE) with Multi-head Latent Attention (MLA) |
| Developed by | Vaswani et al. (2017) | Google (2018) | OpenAI | Various (OpenAI, Google, Meta, etc.) | Google DeepMind | Anthropic | DeepSeek, founded by Liang Wenfeng in 2023 |
| Core Functionality | General sequence modeling | Text understanding | Conversational AI, text generation | Wide-ranging language understanding | Multimodal (text, images, audio, video) | Text processing, reasoning, conversational AI | Advanced reasoning, coding, and mathematical problem-solving |
| Training Approach | Self-attention across sequences | Pretrained with masked language modeling | Autoregressive: next-token prediction | Pretrained on vast datasets | Multitask & multimodal learning | Emphasizes safety, alignment, and large context window | Pretrained on diverse datasets; employs reinforcement learning for reasoning capabilities |
| Contextual Handling | Full sequence attention | Bidirectional context | Autoregressive, token length limited | Few-shot/zero-shot capabilities | Up to 1M tokens context window | Up to 200K tokens context window | Supports context lengths up to 128K tokens |
| Strength | Versatile for diverse NLP tasks | Accurate context understanding | Conversational generation | General-purpose adaptability | Superior for real-time info and multimodal tasks | Strong text handling, safety-first | High efficiency and performance in reasoning and coding tasks; open-source accessibility |
| Weakness | Requires substantial data/compute power | Limited in text generation | Token length limits context memory | High computational costs | Occasional factual inaccuracies | Limited image processing, training biases | Potential censorship concerns; avoids topics sensitive to the Chinese government |
| Applications | Translation, summarization | Text classification, sentiment analysis | Chatbots, content generation | Translation, summarization, code | Cross-modal tasks (video, images, audio) | Customer service, legal documents | Mathematical reasoning, coding assistance, advanced problem-solving |
| Model Size | Varies (small to very large) | Medium to large | Large (e.g., GPT-4 Turbo) | Extremely large (GPT-4, LLaMA) | Nano, Pro, Ultra | Haiku, Sonnet, Opus (up to 1M tokens) | Models like DeepSeek-V3 with 671B total parameters, 37B activated per token |
| Pricing | Varies by implementation | Free (e.g., Hugging Face) | \$20/month for GPT-4 | Varies (OpenAI, Google) | \$19.99/month (Pro), more for Advanced | \$20/month for Claude Pro | Open-source; free access to models like DeepSeek-V3 |
| Notable Feature | Foundation of modern NLP models | Strong contextual embeddings | Autoregressive text generation | Few-shot/zero-shot adaptability | Up-to-date web info, multimodal capabilities | Constitution-based ethics, long-form text coherence | Efficient training with lower computational costs; open-source under MIT license |
| Benchmark Performance | Suitable across NLP benchmarks | Strong on GLUE, SQuAD & classification | Effective in conversational tasks | Leads in multitask benchmarks (MMLU) | Strong in multimodal (DocVQA, TextVQA) | Excellent in coding benchmarks (HumanEval) | Outperforms models like Llama 3.1 and Qwen 2.5; matches GPT-4o and Claude 3.5 Sonnet in benchmarks |
| Explainability | Moderate | Clear, especially in embeddings | Limited for complex results | Varies by use case | Well-integrated with Google Docs | Constitution-driven ethics & transparency | Open-source code promotes transparency; potential concerns over content moderation |

## Key Insights

- Gemini offers exceptional multimodal capabilities, including handling text, images, audio, and video, making it ideal for interdisciplinary and technical tasks like research and content…

Transformers in Deep Learning: Breakthroughs from ChatGPT to DeepSeek – Day 66