Introduction to Tokenization

Tokenization in NLP is the process of breaking text into smaller units called tokens, such as words, subwords, or characters. It’s a crucial first step in any NLP pipeline because it transforms raw text into discrete elements that algorithms can understand (Tokenization in NLP: Types, Challenges, Examples, Tools – Neptune). For example, a simple whitespace tokenizer would split the sentence “Tokenization is important!” into ["Tokenization", "is", "important!"]. By converting text into tokens, we enable deep learning models to handle and learn from textual data. This step has a significant impact on downstream tasks – a good tokenization strategy can improve model performance, while a poor one can introduce errors (Tokenization Techniques for NLP: A Deep Dive into Essential Methods). In essence, tokenization serves as the bridge between human language and numerical representations that models like neural networks require. It simplifies complex text, handles punctuation and contractions (e.g., splitting “don’t” into “do” and “n’t”), and defines a consistent vocabulary for a model. Without tokenization, training a language model would be infeasible, as the model would have to deal with raw text in all its complexity.

Why is tokenization crucial? Consider that modern NLP models cannot directly ingest raw strings; they operate on numerical inputs. Tokenization assigns each token a unique ID (index) in a vocabulary, allowing text to be converted into sequences of integers. This consistency is vital – a pretrained model only works properly if we feed it text tokenized in the same way as its training data. Moreover, tokenization helps manage vocabulary size. Pure word-level tokenization can lead to an enormous vocabulary (especially for languages with rich morphology or when including misspellings, etc.), whereas character-level tokenization keeps vocabulary small but produces very long sequences. Subword tokenization – which breaks words into pieces (like “unhappy” → “un” + “happy”) – strikes a balance between word-level and character-level approaches. This balance reduces out-of-vocabulary (OOV) issues (the model can still understand a rare or new word by its subwords) and keeps sequence lengths reasonable. In summary, tokenization is foundational in NLP: it affects how effectively a model learns context and handles new words, making it a cornerstone for model performance.

Tokenization in Large Language Models (LLMs)

Modern large language models (LLMs) like ChatGPT, BERT, and LLaMA each rely on their own tokenization schemes to convert text into model-readable tokens. Despite sharing the same goal, these models illustrate different tokenization methods – from Byte Pair Encoding (BPE) to WordPiece to SentencePiece – each with distinct algorithms and nuances.

ChatGPT / GPT-family (Byte-Pair Encoding): Models in the GPT family use variants of BPE for tokenization. For instance, GPT-2 and GPT-3 employ a byte-level BPE tokenizer. Byte-level BPE means that instead of starting with individual Unicode characters, the tokenizer starts with bytes (256 possible byte values) as the base vocabulary. This clever trick ensures every possible character (including emojis, accents, etc.) is covered by the base vocabulary, avoiding “unknown” tokens. The tokenizer then learns to merge common byte sequences into larger tokens. As a result, even uncommon or made-up words can be broken into consistent sub-pieces. For example, GPT tokenizers might split a rare word like “🤖AI!” into tokens like ["�", "AI", "!"] (where the robot emoji might be broken into a placeholder byte and then “AI” and “!” as separate tokens). Using byte-level BPE makes the tokenizer robust to any input – no character is truly out-of-vocabulary. The trade-off is that some tokens may look odd or incomplete to humans, but they are optimized for the model. ChatGPT inherits these properties; it counts input and output in tokens (where one token is roughly 4 characters of English text on average). The BPE approach used by GPT models efficiently balances vocabulary size and text coverage, and it’s one reason these models can handle code, URLs, or novel words gracefully.

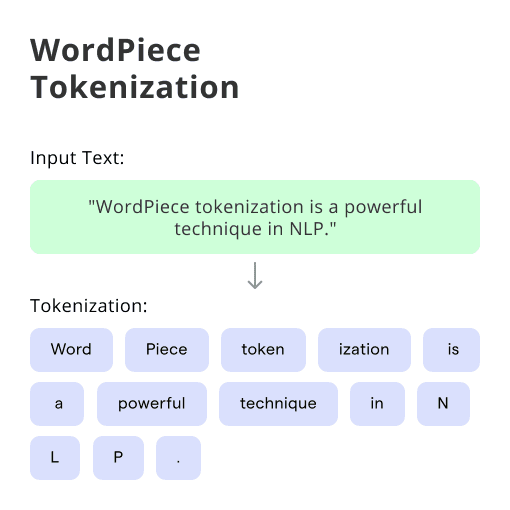

BERT (WordPiece): BERT and its variants (DistilBERT, Electra, etc.) use the WordPiece tokenization algorith】. At first glance WordPiece looks similar to BPE – it also builds a vocabulary of subwords by merging characters – but the selection criterion differs. Instead of merging the most frequent pair of symbols, WordPiece chooses the merge that *maximizes the likelihood of the training corpus】. In practice, this means WordPiece tries to find an optimal trade-off: if merging two tokens greatly improves how well the vocabulary can explain the training text, it will do so, even if that pair isn’t the single most frequent. Intuitively, WordPiece evaluates what information might be lost or gained by each possible merg】. This results in a vocabulary that sometimes handles infrequent but meaningful subwords more gracefully. In BERT’s tokenizer, common words stay intact (“playing” stays “playing”), while rare or complex words are broken down (e.g., “sailing🏄” → “sailing” + “##🏄”; here “##” indicates the second token is a continuation of the prior word). The double-hash prefix is a convention in WordPiece to mark tokens that aren’t the start of a word. This helps preserve word boundaries when the text is reconstructed. *Advantages of WordPiece* include slightly better handling of low-frequency words and a guarantee that the full word can be reconstructed by concatenating tokens (minus the “##”). The downside is that WordPiece training is a bit more complex (requires a likelihood computation) and it’s still deterministic – given the same vocabulary, there’s only one way to tokenize a new word (no randomness). BERT’s success showed that WordPiece tokenization yields rich representations; for example, BERT’s vocabulary has ~30k tokens, including whole words and meaningful subwords like “##ing”, “##ed”, “un##” etc., which allow it to **generalize to unseen words by compositio (An Explanatory Guide to BERT Tokenizer – Analytics Vidhya) (An Explanatory Guide to BERT Tokenizer – Analytics Vidhya)27】.

LLaMA (SentencePiece BPE): LLaMA, an LLM developed by Meta AI, uses a tokenizer built with SentencePiece, which is an open-source library for unsupervised text tokenization. Specifically, LLaMA’s tokenizer is a BPE-based model implemented via SentencePiece, including a feature called byte-fallback for unknown charact24】. SentencePiece tokenization differs from pure BPE or WordPiece in that it doesn’t rely on whitespace or language-specific pre-tokenization – it treats the input text as a raw stream of characters (it even has a special “▁” symbol to represent spac36】. In LLaMA’s case, the tokenizer was trained on a large corpus, yielding a vocabulary of 32k subword tokens. Byte-fallback means that if a character isn’t in the vocabulary (which is rare, since byte-level coverage was included), the tokenizer can break it into byte values to ensure no text is un-tokeniza24】. In essence, LLaMA’s tokenizer is similar in spirit to GPT-2’s – it’s a BPE that can handle arbitrary input – but it was generated by the SentencePiece toolkit rather than Hugging Face’s BPE implementation. SentencePiece vs others: One key difference is that SentencePiece can implement either BPE or another algorithm called Unigram LM (more on this below), and it handles whitespace uniformly by prefixing an underscore-like glyph “▁” to indicate word boundaries. Models like T5 and ALBERT also use SentencePiece (T5 uses the Unigram model with 32k vocab, and ALBERT uses SentencePiece Unigram as well). The advantage of SentencePiece for LLMs is its language-independent approach – you don’t need to apply language-specific rules beforeh35】. This made it a popular choice for multilingual models or scenarios where consistent preprocessing is needed across languages.

Comparing Tokenization Methods – BPE vs WordPiece vs SentencePiece: All these methods are forms of subword tokenization, which aim to balance vocabulary size and text cover23】. Here’s a summary comparison in a table:

| Tokenization Method | Used By Models | Key Idea | Pros | Cons |

|---|---|---|---|---|

| Byte Pair Encoding (BPE) | GPT-2, GPT-3, RoBERTa, LLaMA | Iteratively merge the most frequent pair of symbols (characters or bytes) into a new to61】. Continue until a fixed vocab size is reached. | – Handles out-of-vocabulary words by breaking them into known subwo ([Tokenizers BpeTrainer Overview | Restackio](https://www.restack.io/p/tokenizers-answer-bpe-trainer-cat-ai#:~:text=BPE%20is%20particularly%20effective%20because,to%20understand%20and%20generate%20text))00】. – Efficiently compresses common character sequences, reducing overall token count. |

| WordPiece | BERT, DistilBERT, Electra | Greedy algorithm that merges pairs of tokens which maximize the likelihood of the training data when inclu22】. Uses special prefix (e.g., “##”) for tokens that are continuation of a word. | – Tends to keep frequent words intact and only splits rare ones, providing a good bala08】. – By maximizing likelihood, it considers the impact of a merge, not just frequency, which can yield more meaningful subwo27】. | – Greedy merges mean initial training is a bit more complex (requires probability calculations). – Like BPE, it’s deterministic and cannot adapt tokenization based on context (only one fixed outcome per word with the given vocab). |

| SentencePiece (Unigram) | T5, ALBERT, GPT-J (and SentencePiece-BPE is used in LLaMA) | Uses a unigram language model: start with a large vocab of candidate subwords, then iteratively remove the weakest tokens until the vocab size is reac38】. Often treats input as a raw stream with a special space symbol (no pre-segmentation by spaces). | – Language-agnostic: no need for language-specific rules or even whitespace splitt35】. – Can encode text in multiple valid ways (the tokenizer can choose the most probable segmentation), enabling techniques like subword regularization to improve robustn95】. – Built-in handling of whitespace as a token, which simplifies preprocessing across languages. | – More complex algorithm and typically slower to train than BPE/WordPiece. – Requires storing a model (for probabilities) in addition to the vocab, to decode and sample segmentations. – If not carefully managed, the possibility of multiple segmentations could lead to slight inconsistencies (though usually the most likely segmentation is chosen for deterministic output). |

Table: Comparison of Tokenization Strategies – BPE vs WordPiece vs SentencePiece. All three yield subword vocabularies that let models handle unseen words by composing subword tokens. BPE is widely used (fast and effective), WordPiece was originally introduced for Google’s translation system and later BERT (with a slightly different merge criterion), and SentencePiece implements a unigram model that is especially useful for multilingual and domain-specific scenarios.

Different LLMs choose one of these methods based on their training objectives and data. For example, ChatGPT’s GPT-3 model (and GPT-4) use BPE (with some proprietary optimizations in newer versions), BERT uses WordPiece (hence you see “##” in its tokens), and LLaMA uses SentencePiece-BPE. Despite these differences, the end result is similar: any input text gets converted into a sequence of token IDs that the model looks up in an embedding table. It’s vital to use the correct tokenizer for a given model – feeding BERT text that was tokenized with GPT-2’s tokenizer, for instance, would produce token IDs that BERT’s embedding matrix doesn’t understand, leading to nonsense output.

Building a Tokenizer for Custom Models

Designing and implementing a tokenizer from scratch for a new deep learning model involves several key steps. A well-built tokenizer will match the needs of your dataset and model architecture. Here’s a step-by-step guide:

1. Choose the Right Tokenization Strategy: Decide between word-level, character-level, or subword tokenization. For most modern models, subword tokenization (like BPE, WordPiece, or SentencePiece) is preferred because it balances vocabulary size and the ability to handle unknown words. Your choice might depend on the language and domain:

- For morphologically rich languages (or multilingual corpora), subword methods are almost always beneficial (they can handle prefixes/suffixes).

- If you have a small, specific domain vocabulary (like DNA sequences or programming code), character-level might suffice, or a domain-specific subword vocabulary.

- Simpler tasks or very limited data might get by with word-level (but beware of out-of-vocabulary words).

Consider also whether you need byte-level handling (if your text includes lots of emojis or unusual symbols, byte-level BPE might be a good cho20】). Also decide if you need the ability to sample different tokenizations (if you plan to use augmentation techniques like subword regularization, the SentencePiece Unigram method would support t95】). In summary, pick a tokenizer type that fits your language/data: e.g., BPE for efficiency on large English text, WordPiece if you want a tried-and-true method with slightly refined merges, or SentencePiece if you need language-agnostic processing or an advanced unigram model.

2. Handle Special Tokens: Most NLP models require special reserved tokens in the vocabulary. Common special tokens include:

<unk>(Unknown token for any out-of-vocabulary item, if it ever occurs)<pad>(Padding token to align sequence lengths in a batch)<s>or[CLS](Start-of-sequence token, used in models like BERT for “classification start”)</s>or[SEP](End-of-sequence or separator token, to mark end of input or separate segments)<mask>(Mask token, if training a masked language model like BERT)

Decide which ones you need for your model. For example, a GPT-style model typically uses<|endoftext|>as a special end-of-text token, whereas BERT uses[CLS]and[SEP]. Ensure these tokens are never split by your tokenizer and are assigned fixed IDs (usually the first IDs in the vocab). The order you list special tokens can determine their (Tokenizers BpeTrainer Overview | Restackio)61】 (for instance, you might fix0:<unk>, 1:<pad>, 2:<s>, 3:</s>etc.). Handling special tokens also means configuring the tokenizer’s post-processing: e.g., a BERT tokenizer’s post-processing might automatically add[CLS]at the start and[SEP]at the end of every input sequence. If you’re using a tokenizer library, you can usually specify these tokens upfront so they’re taken into account during train (Tokenizers BpeTrainer Overview | Restackio)62】.

3. Create and Train the Vocabulary: Once strategy and special tokens are set, gather a representative corpus of text. The corpus should be large and diverse enough to capture the language patterns your model needs to learn. Then, use a tokenizer training algorithm to learn the vocabulary:

- For BPE/WordPiece: Initialize the vocabulary with all characters present in the cor (Tokenizers BpeTrainer Overview | Restackio)58】. Then iteratively merge tokens. BPE will merge the most frequent pair at each s61】, whereas WordPiece will merge based on likelihood g19】. Continue until you reach the desired vocabulary size (a hyperparameter you choose, often 30k-50k for large models, smaller for niche tasks).

- For SentencePiece Unigram: Start with a large initial list of substrings (this could be all words plus substrings). Iteratively remove those that least impact a compression-style objective until the vocab is down to your target s47】.

During training, you’ll also want to apply normalization and pre-tokenization. Normalization could include lowercasing, Unicode normalization, stripping accents, etc., to standardize t01】. Pre-tokenization (if using BPE/WordPiece) often involves splitting on whitespace and punctuation so that the subword algorithm doesn’t merge across word boundaries incorrec (Complete Guide to Subword Tokenization Methods in the Neural Era)83】. (SentencePiece often does this internally by including a special underscore for spaces). Many modern tokenizers also include rules to handle numbers, dates, or URLs specially if needed (depending on your use case, you might decide to keep numbers as whole tokens for example).

You can train using libraries like Hugging Face Tokenizers or SentencePiece. For instance, using Hugging Face’stokenizerslibrary, you could do:

from tokenizers import Tokenizer, models, trainers, pre_tokenizers

# Initialize a BPE tokenizer (you could swap to WordPiece or Unigram here)

tokenizer = Tokenizer(models.BPE())

# Set a pre-tokenizer to handle basic splitting by whitespace

tokenizer.pre_tokenizer = pre_tokenizers.Whitespace()

# Define special tokens and trainer

special_tokens = ["[UNK]", "[CLS]", "[SEP]", "[PAD]", "[MASK]"]

trainer = trainers.BpeTrainer(vocab_size=30000, min_frequency=2, special_tokens=special_tokens)

# Train on a corpus (could be a file or an iterator of text)

files = ["path/to/your/dataset.txt"] # your training corpus path

tokenizer.train(files=files, trainer=trainer)

tokenizer.save("custom-tokenizer.json")

In this example, we train a BPE tokenizer with a vocab of 30k tokens, ignoring any subword that appears less than tw (Tokenizers BpeTrainer Overview | Restackio)61】. Special tokens are included so they get reserved IDs. After training, the resulting "custom-tokenizer.json" can be loaded to encode new texts. Note: Training a tokenizer can take time for a large corpus, but libraries like Hugging Face’s optimize this in C++ under the hood for speed. Also, remember to store the tokenizer (vocab and merge rules or model file) – you’ll need it to preprocess future data exactly the same way.

4. Integrate the Tokenizer with Your Model: Once trained, your tokenizer defines how text turns into ids, and your model’s embedding layer will typically be of size (vocab_size, embedding_dim). It’s crucial that the model’s embedding layer size matches the tokenizer’s vocab size. You’ll load the tokenizer in your training pipeline to encode all input text (and possibly decode outputs if needed). Take care of the following:

- Padding and Truncation: If you’re batching sequences, use the tokenizer to pad sequences to a fixed length (or use dynamic padding per batch). The

<pad>token (or equivalent) should be ignored by your model’s loss function (e.g., masked in attention mechanisms). - Special token usage: Ensure that when you feed data to the model, you are adding any required special tokens. For example, for a sequence-pair classification, you might need

[CLS] sentence1 [SEP] sentence2 [SEP]as the input format for BERT. Your tokenizer can often handle this via itsencode_plusor similar method by settingadd_special_tokens=True. - Testing the tokenizer: Try encoding some sample sentences and decoding them back to text to verify it works as expected (the decoded text may not be exactly the original due to normalization, but it should be semantically equivalent except for maybe casing or removed accents). Also ensure that common words aren’t over-splitting and that rare but important terms are reasonably split. It’s an iterative process – you might adjust vocab size or normalization rules and retrain the tokenizer if the initial results aren’t satisfactory.

By following these steps, you design a tokenizer that is tailored to your new model. In practice, many developers start from an existing tokenizer (for instance, GPT-2’s or BERT’s) and refine it with new d22】, especially if they want to leverage known good configurations (this saves time and ensures compatibility with architectures expecting certain special tokens). But building from scratch as above is very useful when your domain data is very different from anything available or when creating a new language model from the ground up.

Tokenizing Data for Fine-Tuning LLMs

When fine-tuning a pre-trained LLM (like fine-tuning GPT-3, BERT, etc. on custom data), proper tokenization of your dataset is essential. You generally should not change the tokenizer of the model – instead, adapt your data to the model’s existing tokenizer. Here’s how to approach it:

- Use the Pre-trained Model’s Tokenizer: Always load the same tokenizer that was used in the pre-training of the model. For example, if you fine-tune BERT, use

BertTokenizer.from_pretrained('bert-base-uncased'). The reason is that the model’s embedding layer is tied to that tokenizer’s vocabulary indices. If you were to tokenize text differently or use a different vocab, the token IDs would not align with the embeddings the model lear (huggingface – save fine tuned model locally – and tokenizer too? – Stack Overflow) (huggingface – save fine tuned model locally – and tokenizer too? – Stack Overflow)88】. As one Stack Overflow answer succinctly puts it: *“The tokenizer cannot be affected by fine-tuning. The tokenizer converts the tokens to vocabulary indices which need to remain the same… otherwise, it would not be possible to train the static embedding at the beginning of the BERT computation (huggingface – save fine tuned model locally – and tokenizer too? – Stack Overflow)91】. In short, keep the tokenizer fixed during fine-tuning. - Preprocess Your Text: Fine-tuning datasets often come from raw text sources (tweets, custom transcripts, etc.) that might need cleaning. Apply similar preprocessing that was likely used in pre-training. For instance, if the model is uncased (like BERT uncased), you should lowercase your text before tokenization (the tokenizer might do this for you, but ensure it’s consistent). Remove any special characters that the model might not handle, though most modern tokenizers (byte-level) will handle them. If your data has things like URLs or emails, consider if you want to replace them with placeholder tokens or keep them – think about how they might appear in the model’s original training data. Sentence splitting isn’t usually necessary because the tokenizer and model can handle full sequences, but if you have extremely long texts, you might split them into chunks within the model’s max token limit.

- Match Tokenizer Settings: When encoding your fine-tuning data, use the tokenizer’s parameters that match how the model was trained. For example, GPT-2 models don’t have a special “end of sequence” token that needs adding for single sequences, but BERT requires

[CLS]at start and[SEP]at end – the Hugging Face tokenizer will do this if you useencode_plus/__call__withadd_special_tokens=True. Also, use the same truncation/padding approach. If the model was trained with sequences up to 512 tokens, you should typically truncate longer inputs to 512 (unless you are using a model that can handle longer). In code, you might do something like:

encodings = tokenizer(batch_texts, padding=True, truncation=True, max_length=512)

This will ensure each sequence is tokenized to IDs, padded to equal length, and truncated if too long, according to the tokenizer’s logic. Padding should use the model’s pad token id (which the tokenizer knows, e.g., tokenizer.pad_token_id). Ensure the attention mask is set so the model knows which tokens are padding.

- Common Pitfalls to Avoid: One pitfall is inadvertently changing the tokenizer. For example, one might think to “improve” the tokenizer by adding new tokens for domain-specific words before fine-tuning. Be cautious: adding tokens is possible but then you must resize the model’s embedding layer to match, and the new tokens’ embeddings start untrained (often initialized randomly). If you have a lot of new vocabulary that the model didn’t see, this can be useful, but if it’s only a few, the model might handle them as is via subwords. Another pitfall is mismanaging special tokens – e.g., forgetting to add a

[SEP]between a question and answer if the model expects it. Always refer to the documentation or configuration of the model to see what special tokens it uses. For instance, when fine-tuning a QA model, it might expect input like “[CLS] question [SEP] context [SEP]”. The tokenizer can often add these if you pass both strings to it properly. Finally, watch out for encoding errors: ensure the text is in the correct encoding (UTF-8) so that the tokenizer can handle it. - Efficiency Tip: Use the tokenizer’s batch encoding methods and consider multiprocessing if your dataset is large. Hugging Face’s tokenizers are fast (especially the Rust-implemented ones) and can handle batches of text quickly. This will save time in preprocessing before fine-tuning.

By carefully tokenizing your fine-tuning data with the model’s own tokenizer and mirroring the original setup, you help the model adapt to your data smoothly. The fine-tuning process will then adjust the model’s weights for your task, but the “input interface” (i.e., how text is represented as tokens) remains consistent and reliable.

Tokenization for Retrieval-Augmented Generation (RAG)

Retrieval-Augmented Generation (RAG) is an architecture that combines an information retrieval component with a generative model. In a RAG pipeline, tokenization plays a role in both the indexing of documents and in query processing for the generative model.

When building a RAG system, you typically have a large set of documents (or knowledge chunks) that you index in a vector database. These documents are often transformed into embeddings using a language model – and to get embeddings, the text must be tokenized and fed into an encoder model (like a sentence transformer or BERT). Consistent tokenization between the stored documents and incoming queries is key: you want the embedding model to represent them in the same vector space. For example, if you use SentenceTransformer('all-MiniLM-L6-v2') as your embedding model, you should use its tokenizer to encode both documents and queries before embedding. If the documents were encoded with a different tokenizer/model than the one used for queries, the retrieved results might be irrelevant because the vector spaces won’t match.

Tokenization also affects how you chunk documents for indexing. It’s common to split documents into chunks of a certain token length (e.g., 256 tokens or 512 tokens per chunk) before embedding, to ensure chunks fit within the model’s input size and contain semantically coherent information. Here, using tokens (rather than characters or words) to measure chunk size is practical because model input limits are measured in tokens. You might use the tokenizer to count tokens and split long texts at sentence boundaries when the token count exceeds a threshold.

Once documents are indexed (each as an embedding vector), a typical RAG query flow is: tokenize the incoming user query, generate its embedding, retrieve the nearest document embeddings, and feed the retrieved text + original query into the generative model to produce an ans20】. At query time, tokenization helps in two places:

- Forming the query embedding: The user’s query (e.g., “What are the health benefits of green tea?”) is tokenized and passed through the embedding model to get a vector. Techniques like lowercasing or removing punctuation might be applied via the tokenizer to match how documents were embedded. The quality of retrieval can depend on this – e.g., if the query has a typo, a robust tokenizer/embeddings might still handle it by breaking it into subwords.

- Feeding the generative model: The retrieved text snippets are often concatenated with the original query to form a prompt for a generative LLM (like feeding: “Query: … [retrieved text] … Answer:” to GPT-3). This final prompt is tokenized by the generative model’s tokenizer (which could be different from the retriever’s tokenizer if they are different models). Here you must be careful to respect the generative model’s token limits; if the retrieved text is too long in tokens, you may need to truncate or select fewer passages. Some RAG implementations also insert special tokens or separators between documents in the prompt to clearly delineate them – those need to be chosen so they don’t conflict with the model’s tokenizer (often just newline characters or a custom separator token known to the model).

Efficient Indexing & Querying: Many RAG setups use vector databases (like FAISS, Pinecone, etc.) to store embeddings. These work with fixed-size numeric vectors, not tokens directly, but the step to create those vectors is where tokenization matters. For example, if using a DPR (Dense Passage Retrieval) model, both questions and passages are tokenized with a BERT tokenizer and then encoded. The alignment of tokenization ensures that the dot product similarity is meaningful. It’s also worth noting that some retrieval systems still use traditional keyword search as part of RAG (hybrid systems). In such cases, tokenization (often simpler, like lowercasing and splitting on spaces, perhaps with stemming) is used for the keyword index, while a separate tokenizer is used for the neural model. This dual-tokenization approach can be tricky, but if done, you handle each separately.

Below is a diagram of a typical RAG process, showing how a user query is processed through embedding, retrieval, and generation steps. The Embedding layer tokenizes the query and converts it into a vector. The retrieval system pulls documents (which were indexed via tokenization + embedding). Then the generative model uses tokenization again to intake the combined prompt (query + retrieved text) and produce a final answer (What is Retrieval Augmented Generation (RAG)? The Key to Smarter, More Accurate AI | DigitalOcean)ge】 RAG process pipeline: the user query is tokenized and embedded, relevant documents are retrieved (based on vector similarity), and a generative model combines the query with retrieved content (tokenized as input) to generate a respo20】.

In summary, tokenization in RAG ensures that the retrieval component (which might use transformer encoders) and the generation component (the LLM) both receive inputs in the form they expect. It’s a behind-the-scenes player: if your tokens are inconsistent, your vectors will be off, and your generative answers might be based on mismatched or irrelevant info. But when done right, tokenization in RAG allows seamless bridging of search and generation – the query and documents meet in the same vector space, and the language model can read the retrieved text fluently to give an informed answer.

Real-World Applications and Best Practices

Tokenization is employed in virtually all NLP applications. Let’s look at a few real-world use cases and highlight best practices in each:

- Chatbots and Conversational AI: In chatbot systems (like virtual assistants or customer support bots), tokenization is the first step when the user sends a message. The bot’s language model (be it GPT-based or a smaller transformer) tokenizes the user input to understand it and also tokenizes its own responses when generating text. Best practice here is to ensure the tokenizer can handle the conversational data specifics – for instance, users may use slang, typos, or emojis. A byte-level BPE tokenizer (like GPT-2’s) is a good choice because it won’t choke on an unknown emoji or an out-of-vocab acronym; it will break them into meaningful pieces or byte sequences. Another practice is to include some normalization like lowercasing only if the model is uncased; if the model is cased, don’t lowercase, as you’d lose information (e.g., “USA” vs “usa”). In building a custom chatbot, developers sometimes augment the tokenizer with domain-specific jargon – e.g., a company’s product names – to ensure they aren’t split oddly. This can improve the bot’s understanding of key terms (but remember to resize the model’s embeddings if you do this).

- Search Engines (Information Retrieval): Traditional search engines use tokenization too, though often simpler than neural models. They might use rule-based word tokenizers and normalization (like turning “running” into “run” via stemming, removing punctuation, etc.) to index documents and queries. In modern semantic search (using BERT or similar models for retrieval), subword tokenization is used to embed text into vectors. For search, a best practice is to remove or ignore very common tokens (stop words) in some pipelines to focus on meaningful terms – but if using a neural model, you typically feed the full tokenized text to the model and let it learn the importance (as transformers can figure out that “the” is not important via attention). For multilingual search, a tokenizer like SentencePiece that can handle multiple languages is useful. Ensuring that the query tokenizer and document tokenizer are the same (especially if using cross-encoders or dual encoders) is critical so that “résumé” in a query and “résumé” in a document match in tokens (potentially as “r”, “##é”, “##sum”, “##é” in WordPiece for example).

- Machine Translation: Translation models, from the early NMT systems to advanced ones like Google’s and OpenAI’s, rely on tokenization to break sentences into translatable units. Subword tokenization was a breakthrough for translation: the BPE method was introduced in a 2016 machine translation paper and showed huge improvements in translating rare wo (Complete Guide to Subword Tokenization Methods in the Neural Era) (Complete Guide to Subword Tokenization Methods in the Neural Era)88】. For example, a translation model might not know the German word “Donaudampfschifffahrtselektrizitätenhauptbetriebswerkbauunterbeamtengesellschaft” as a single token (who would!), but with BPE it can handle it as pieces and translate those. Best practices in MT include: use separate tokenization for source and target languages (each with their own vocab) unless it’s a multilingual model, handle casing and accent normalization (depending on languages), and possibly integrate tokenization with pronunciation for languages like Chinese (where tokenization might actually be segmentation into characters or phonetic units). Also, when training translation models, one might intentionally add tokens for things like proper nouns or copyable entities to ensure they are preserved.

- Text Summarization and Generation: In summarization tasks, tokenization helps handle long documents by breaking them into tokens that an encoder can process. Models like BART or T5 which are used for summarization have their tokenization strategies (BART uses byte-level BPE like GPT-2; T5 uses SentencePiece Unigram). A practical consideration is the length – you might need to truncate tokenized inputs if they exceed the model’s limit (e.g., older models can’t go beyond 1024 tokens). Tools exist to estimate how many tokens a text will produce, which is handy for deciding where to cut off or whether to use a model with a larger token window (like GPT-4 can handle ~8k to 32k tokens depending on version). Best practice: when chopping up text to fit a model, do it on logical boundaries (paragraphs or sentences) rather than blindly at the 1024th token, to avoid breaking the context in awkward ways.

- Sentiment Analysis / Text Classification: Even though classification tasks don’t generate text, they also start with tokenization. If using a pre-trained transformer like BERT for classification, the key is to replicate the exact tokenization it expects (including special tokens and max length). For production systems, one should also be mindful of speed: using the optimized

Fastversions of tokenizers (Hugging Face provides Fast tokenizers in Rust) can make a big difference in throughput when classifying millions of tweets, for instance. Another tip is to cache tokenized sequences if the same text is analyzed repeatedly (not always applicable, but if you have a fixed corpus, tokenizing once and storing IDs can save time later). - Speech Recognition & Multimodal systems: Tokenization isn’t only for text; in speech recognition, after converting audio to text, the text is tokenized before feeding to language models or for processing. Whisper, OpenAI’s ASR model, uses a tokenization similar to GPT-2’s (byte-level BPE) but with some tweaks for multilingual data. Best practice in such systems is to be careful with how punctuation or casing is introduced – often ASR outputs are lowercase without punctuation, and a post-process inserts those. The insertion might require the text to be tokenized in a way that the language model can handle. For multimodal (e.g., image+text) scenarios, tokenization is for the text part, and similar ideas apply (consistent, appropriate tokenization for any generated captions or recognized text).

Across these applications, a few general best practices stand out:

- Maintain Consistency: Always use the same tokenization procedure during training and inference. Inconsistencies (even something as simple as an extra space handling) can lead to unexpected differences.

- Monitor OOVs: Keep an eye on how often the tokenizer produces

[UNK](unknown) tokens on your data. Ideally, it should be very low (close to 0%). If you see a lot of[UNK], it means your vocabulary might not cover important terms in your dataset – consider extending the vocab or using a different strategy. - Optimize for Your Use Case: Tokenization can sometimes be a bottleneck in data pipelines. Use faster implementations or batch your tokenization calls. For deployment, you might even convert tokenization logic into a static lookup (for example, a C++ implementation or a stored index) if needed for extreme speed. Libraries like SentencePiece can tokenize strings in batches very quickly in C++.

- Be Mindful of Language and Domain: If your application deals with code (like GitHub Copilot, which is an LLM for code), the tokenizer might need to treat spaces or indentations differently (in fact, OpenAI trained Codex on a mix of bytes that include whitespace significance). For biomedical text, you might want a tokenizer that doesn’t split “HbA1c” into “Hb” + “##A1c” (perhaps you add “HbA1c” as a whole token). Domain adaptation sometimes requires custom tokenization rules.

Tokenization might seem like a small preprocessing detail, but in practice it’s intertwined with how effectively an NLP model learns and performs. A well-chosen tokenizer can make a model’s job easier by presenting linguistically meaningful pieces, while a poorly chosen one can hide patterns or confuse the model. Therefore, understanding and carefully setting up tokenization is a key skill for practitioners building real-world NLP systems.

Key Notes:

Tokenization is a fundamental yet nuanced component of NLP workflows. In this post, we learned that tokenization is the gateway from raw text to model-input – it defines how text is chopped into tokens and ultimately mapped to numeric IDs. We explored how major language models rely on different tokenization strategies, from GPT’s byte-level BPE ensuring robustness to any in20】, to BERT’s WordPiece carefully balancing vocabulary with corpus likelih21】, to SentencePiece’s language-independent subword models bringing flexibility and consistency across langua35】. We went through the process of building a tokenizer from scratch, emphasizing choosing the right approach for your data and taking care of special tokens and vocabulary training. We also covered practical advice for fine-tuning, where keeping the tokenizer consistent is paramo (huggingface – save fine tuned model locally – and tokenizer too? – Stack Overflow)91】, and looked at tokenization’s role in advanced applications like Retrieval-Augmented Generation, where it underpins both document indexing and query answering.

Key takeaways: Always align your tokenizer with your model – a mismatch can break a model completely or subtly degrade performance. Subword tokenization is the de-facto standard for modern NLP because it handles the open vocabulary problem so w (Tokenizers BpeTrainer Overview | Restackio)00】, but be mindful of the differences between BPE, WordPiece, and Unigram models when deciding which to use. When customizing, use tools and libraries to your advantage (training a tokenizer is much easier now with off-the-shelf libraries). And never underestimate the impact of tokenization: something as small as how you split on a punctuation mark can have downstream effects on what a model learns.

For developers working with NLP models, ingoampt recommendation is to spend time understanding your tokenizer. Inspect examples of tokenized output, try out edge cases (emoji, rare words, different languages if applicable), and ensure the tokenization aligns with your task needs. If your use case involves special formats (like math expressions, code, or URLs), consider whether the tokenizer handles them or if you need a preprocessing step. In fine-tuning or RAG setups, double-check that you’re using the correct tokenizer and settings – a lot of issues can be traced back to tokenization inconsistencies.

By mastering tokenization, you gain a deeper control over your NLP models. It might seem like a low-level detail compared to model architectures or algorithms, but it’s the first link in the chain of NLP inference. A strong chain starts with a solid first link, and in NLP that is tokenization. Armed with the knowledge from this guide, you should be well-equipped to make informed decisions about tokenization in your projects – leading to more effective and reliable language model applications.

Final Explanation with Example To Understand Better

Step-by-Step Explanation of Deep Learning Training (with Tokenization) for LLMs

| Step | Detailed Explanation | Simple Example |

|---|---|---|

| 1 | Collecting Raw Text Data Gather text data from various sources (books, websites, etc.). | "Hello world! Welcome to NLP." |

| 2 | Text Preprocessing Clean and standardize the text (e.g., lowercase, remove special characters if necessary). | "hello world! welcome to nlp." |

| 3 | Tokenization Break text into smaller units called tokens. Using a WordPiece tokenizer (like BERT): frequent words remain whole, rare or new words split into smaller pieces with ## prefix. | "hello world ! welcome to nlp ." → ["hello", "world", "!", "welcome", "to", "nl", "##p", "."] |

| 4 | Token ID Conversion Each token maps to a unique numerical ID (based on a vocabulary). The tokenizer provides this mapping. | ["hello", "world", "!", "welcome", "to", "nl", "##p", "."]→ [7592, 2088, 999, 6160, 2000, 2400, 2361, 1012] |

| 5 | Creating Embeddings Token IDs are converted into embedding vectors (numerical representations capturing meaning). Each token ID corresponds to one row in an embedding matrix. | For "hello" (token ID: 7592), a hypothetical embedding vector (of size 4 for simplicity) could be:[0.21, -0.14, 0.36, 0.09]"world" (token ID: 2088): [0.05, 0.20, -0.13, 0.45]"welcome" (6160): [0.11, -0.31, 0.02, 0.07]and so forth for each token. |

| 6 | Transformer Layers (e.g., GPT or BERT) The embedding vectors pass through transformer layers. Attention mechanisms analyze each token’s relation to every other token, creating “context-aware” embeddings. | "hello" embedding now includes context from "world", "welcome", etc., resulting in a new, context-aware vector (still same length, e.g., [0.22, -0.05, 0.03, 0.10]). |

| 7 | Output Prediction (Next Word or Masked Prediction) The model predicts tokens (next word, masked words, etc.) by producing probabilities for every token in the vocabulary. | Given "welcome to", the model predicts probabilities like:"nlp": 0.75, "machine": 0.10, "world": 0.05, "python": 0.03, others: 0.02 each... |

| 8 | Calculating Loss Compare predictions to the true target. This comparison uses a loss function (e.g., cross-entropy loss). | Target token is "nlp" (token ID: 2361). Model prediction probabilities:"nlp": 0.75, "ml": 0.10, "ai": 0.05, "data": 0.05…Cross-entropy loss measures how well the model predicted the correct token (high probability = low loss, incorrect predictions = higher loss). |

| 8 | Backpropagation (Updating Model Weights) The calculated loss generates signals (gradients) that guide how weights in the model change. | Gradients derived from the loss value propagate back, updating weights in layers such as embeddings, attention, and fully connected layers to improve future predictions. |

| 8a | Weight Updates Using optimizers (like Adam), weights slightly adjust towards more accurate predictions. | Embedding for token "welcome" might slightly change from [0.11, -0.31, 0.02, 0.07] to [0.12, -0.30, 0.01, 0.08]. |

| 9 | Repeat Iteratively (Epochs) The entire dataset is processed multiple times to progressively improve accuracy. | Repeat steps (2–8a) across many sentences and many epochs, steadily refining model predictions. |

| 10 | Fine-tuning (Optional) After pre-training, fine-tune the model on a specific task with smaller datasets. Crucially, use the same tokenizer. | If fine-tuning ChatGPT on medical data, new data such as "COVID vaccine" must tokenize exactly as pre-trained (["co", "##vid", "va", "##ccine"], not differently). |

Visual Diagram of the Entire Training Pipeline:

Raw Text

|

▼

Preprocessing

("Hello World!")

|

▼

Tokenizer (WordPiece or BPE)

["hello", "world", "!"]

|

▼

Token IDs Conversion

[7592, 2088, 999]

|

▼

Embedding Layer

[[0.01, 0.12,...], [0.15, -0.01, 0.23], [0.05, -0.09, 0.12]]

|

▼

Transformer Encoder (Attention & Context)

Updated Token Embeddings (Context-aware)

|

▼

Output Layer (Prediction)

Probabilities of Next Token:

hello: 0.05, NLP: 0.75, AI: 0.10, ...

|

▼

Loss Function (Cross-entropy)

|

▼

Backpropagation (Compute Gradients)

Update Weights in Embeddings & Transformer

|

▼

Repeat for many epochs

(Training & Fine-tuning)

Simple Practical Example of Tokenization & ID Mapping (for clarity):

| Raw Text Example | "I love NLP" |

|---|---|

| Tokenizer Output (WordPiece) | ["i", "love", "nl", "##p"] |

| Token IDs | [200, 1050, 2400, 2361] |

| Embeddings (hypothetical vectors) | [0.01, 0.05, 0.03, 0.10], [0.25, -0.30, 0.08, 0.11], etc. |

Important Concepts here:

- Tokenization: Essential preprocessing step converting text into numerical tokens for model understanding.

- Embeddings: Numerical vectors capturing the semantic meaning of tokens.

- Transformer Layers: Build context and relationships between tokens (attention mechanism).

Now what question is best to ask ?

Question :

if I wanna fine tune a LLM model, can I use any tokenize type I want or we must use the same type of the LLM model was tokenized? what if we wanna use a LLM with RAG instead of fine tune ?

Answer :

When fine-tuning a Large Language Model (LLM), it is crucial to use the same tokenizer that was employed during the model’s initial training. This ensures consistency in how text is processed into tokens, maintaining alignment between the input data and the model’s learned representations.

Why Must the Same Tokenizer Be Used for Fine-Tuning?

- Consistency in Tokenization: Different tokenizers may segment text differently. Using the original tokenizer ensures that the model interprets the input data in the same manner as during its initial training.

- Vocabulary Alignment: The model’s embeddings are tied to specific token IDs generated by its original tokenizer. A different tokenizer could produce mismatched token IDs, leading to ineffective or erroneous embeddings.

- Performance Optimization: Consistency in tokenization contributes to stable and predictable model performance, as the model’s parameters are optimized for the tokenization scheme it was trained on.

Using an LLM with Retrieval-Augmented Generation (RAG) Without Fine-Tuning

Retrieval-Augmented Generation (RAG) enhances LLMs by integrating them with external knowledge bases, allowing models to access up-to-date or domain-specific information beyond their static training data. This approach can be implemented without fine-tuning the LLM itself.

Key Considerations for Using LLMs with RAG Without Fine-Tuning:

- Data Indexing: Convert external documents into embeddings and store them in a vector database. This process enables efficient retrieval of relevant information based on user queries.

- Query Processing: When a user submits a query, it is transformed into an embedding, which is then used to search the vector database for pertinent documents.

- Context Augmentation: The retrieved documents are combined with the original query to provide additional context, which is then fed into the LLM.

- Response Generation: The LLM generates a response that incorporates both its internal knowledge and the external information retrieved, resulting in more accurate and contextually relevant outputs.

Advantages of Using RAG Without Fine-Tuning:

- Access to Up-to-Date Information: RAG allows the model to incorporate recent or domain-specific data without the need for retraining.

- Cost and Time Efficiency: Avoids the computational expense and time associated with fine-tuning large models.

- Flexibility: Enables the model to adapt to various topics by retrieving relevant information as needed, without altering the model’s parameters.

Tokenization in RAG Systems:

RAG systems enhance LLMs by retrieving relevant information from external knowledge bases to provide up-to-date and contextually accurate responses. In this setup, tokenization occurs at two primary stages:

- Indexing External Data:

- Chunking and Embedding: External documents are divided into manageable chunks and transformed into embeddings—vector representations capturing semantic meaning. This process often involves tokenization to ensure accurate embedding generation.

- Tokenizer Choice: The tokenizer used here can differ from the LLM’s original tokenizer. The primary goal is to create effective embeddings for the retrieval system, and the choice of tokenizer depends on the retrieval mechanism’s design and requirements.

- Query Processing and Response Generation:

- User Query Tokenization: When a user submits a query, it’s tokenized using the LLM’s original tokenizer to maintain consistency with the model’s training.

- Retrieval and Augmentation: The system retrieves relevant information using the retrieval system’s tokenization method and augments the query with this information.

- LLM Input: The augmented input is tokenized using the LLM’s original tokenizer before being fed into the model for response generation.

Key Considerations:

- Tokenizer Consistency for LLM Input: Any text input to the LLM must be tokenized using the same tokenizer employed during the model’s training. This consistency ensures that the model interprets the input correctly, preserving the integrity of its internal representations.

- Flexibility in Retrieval System Tokenization: The retrieval component of a RAG system can utilize a different tokenization strategy suitable for its indexing and search algorithms. This flexibility allows optimization of the retrieval process without affecting the LLM’s performance.

Conclusion for answering the questions :

In RAG implementations without fine-tuning the LLM, it’s imperative to use the LLM’s original tokenizer for any input processed by the model. However, the retrieval system’s tokenization can differ, tailored to its specific needs. Ensuring this distinction maintains system efficiency and effectiveness, leveraging external data to enhance the LLM’s responses without compromising its foundational processing mechanisms.