Understanding Regularization in Deep Learning – Day 47

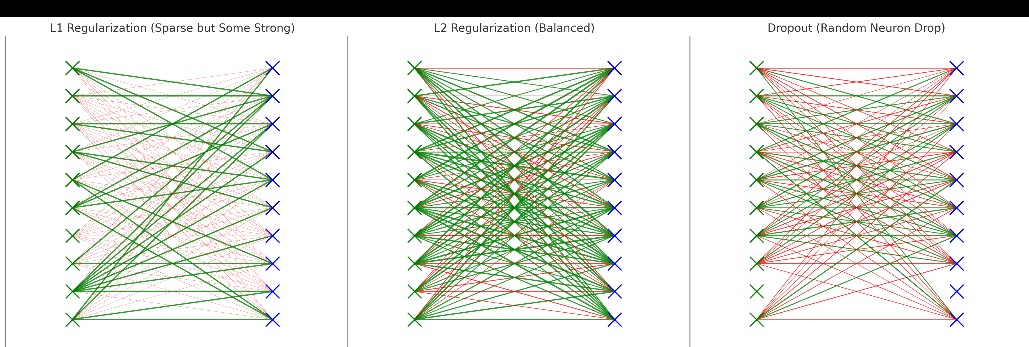

Understanding Regularization in Deep Learning – A Mathematical and Practical Approach Introduction One of the most compelling challenges in machine learning, particularly with deep learning models, is overfitting. This occurs when a model performs exceptionally well on the training data but fails to generalize to unseen data. Regularization offers solutions to this issue by controlling the complexity of the model and preventing it from overfitting. In this post, we’ll explore the different types of regularization techniques—L1, L2, and dropout—diving into their mathematical foundations and practical implementations. What is Overfitting? In machine learning, a model is said to be overfitting when...