Adam vs SGD vs AdaGrad vs RMSprop vs AdamW – Day 39

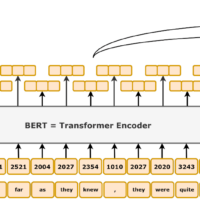

Choosing the Best Optimizer for Your Deep Learning Model

When training deep learning models, choosing the right optimization algorithm can significantly impact your model’s performance, convergence speed, and generalization ability. Below, we will explore some of the most popular optimization algorithms, their strengths, the reasons they were invented, and the types of problems they are best suited for.

1. Stochastic Gradient Descent (SGD)

Why It Was Invented

SGD is one of the earliest and most fundamental optimization algorithms used in machine learning and deep learning. It was invented to handle the challenge of minimizing cost functions efficiently, particularly when dealing...