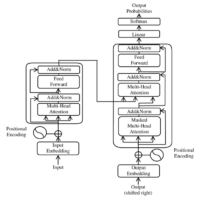

What is Machine Learning?

Machine Learning (ML) is a subset of artificial intelligence (AI) that enables computers to learn from data and make decisions without being explicitly programmed. By identifying patterns and correlations in data, ML models can perform tasks such as prediction, classification, and optimization. For instance, Netflix uses machine learning to recommend shows and movies based on a user’s viewing history. ML has revolutionized fields like healthcare, finance, e-commerce, and robotics by automating complex decision-making processes and enabling systems to adapt to new information.

The fundamental idea of machine learning is that machines can improve their performance over time by learning from data. Instead of relying on hardcoded rules, ML algorithms build models whose parameters are adjusted during training to improve accuracy and efficiency.

What is Deep Learning?

Deep Learning (DL) is a specialized subset of machine learning inspired by the structure and functioning of the human brain. It uses artificial neural networks (ANNs) with multiple layers (hence the term “deep”) to process and analyze large volumes of complex data. Deep learning is particularly effective for tasks such as image and speech recognition, natural language processing, and autonomous driving.

Traditional machine learning often relies on manual feature engineering, whereas deep learning models can automatically extract features from raw data. For example, a traditional machine learning model may need hand-engineered features to analyze images, while a deep learning model such as a convolutional neural network (CNN) learns to detect edges, textures, and shapes on its own. This ability to learn hierarchical representations of data makes deep learning particularly well suited to unstructured data such as images, videos, and text.
However, deep learning typically requires more computational resources and larger datasets for effective training, which distinguishes it from traditional machine learning models, which often rely on structured data and perform well on smaller datasets. Other deep learning architectures, such as recurrent neural networks (RNNs), likewise extract features from raw data without manual intervention.

Types of Machine Learning

Machine learning is broadly classified into three main types, each addressing distinct kinds of problems and datasets. The distinction matters because it determines how a model learns from data: by mapping inputs to known outputs, by discovering hidden patterns, or by adapting through interaction. Understanding these types helps practitioners select the most appropriate approach for tasks such as predicting outcomes, segmenting data, or automating decision-making.

Supervised Learning

In supervised learning, the model is trained on a labeled dataset, where each input has a corresponding output. The algorithm learns to map inputs to outputs by minimizing the error between predicted and actual outcomes.
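
To make the idea of minimizing the error between predicted and actual outcomes concrete, here is a minimal sketch in plain Python. It fits a straight line to a handful of labeled points using gradient descent on mean squared error. The toy dataset, the function names `fit_linear` and `mse`, the learning rate, and the number of epochs are all illustrative assumptions, not something the article prescribes.

```python
# Minimal sketch of supervised learning: fit y ≈ w*x + b to labeled pairs
# by gradient descent on mean squared error (MSE).
# The data and hyperparameters are illustrative assumptions.

def fit_linear(xs, ys, lr=0.05, epochs=2000):
    """Return (w, b) that approximately minimize MSE on the labeled data."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction error for each labeled example.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of MSE with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Step against the gradient to reduce the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def mse(xs, ys, w, b):
    """Mean squared error between predictions w*x + b and the labels."""
    return sum(((w * x + b) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Toy labeled dataset generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b = fit_linear(xs, ys)
print(f"w={w:.3f}, b={b:.3f}, mse={mse(xs, ys, w, b):.6f}")
```

On this toy data the learned `w` and `b` should end up close to the slope and intercept used to generate the labels. In practice a library implementation would usually replace the hand-written loop, but the loop shows what "learning from labeled data" amounts to.
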

Examples: Linear regression, logistic regression, support vector machines (SVM), and neural networks.

Applications: Fraud detection, spam filtering, and predicting housing prices.

Unsupervised Learning

Unsupervised learning deals with unlabeled data. The model identifies patterns, structures, or clusters in the data without explicit supervision.

Examples: K-means clustering, hierarchical clustering, and principal component analysis (PCA).

Applications: Customer segmentation, anomaly detection, and recommendation systems.

Reinforcement Learning

In reinforcement learning, an agent learns to make decisions by interacting with an environment. The agent receives rewards or penalties based on its actions and optimizes its strategy to maximize cumulative rewards.

Examples: Q-learning, deep Q-networks (DQN), and policy gradient methods.

Applications: Game playing (e.g., AlphaGo), robotic control, and autonomous vehicles.

Some Examples of New Models and Innovations in Machine Learning and Deep Learning from 2024 and 2025

Deep Learning Innovations:

Quantum Convolutional Neural Networks (QCNNs)

Combine quantum computing principles with CNNs for efficient high-dimensional data processing.

Applications: Quantum data analysis, image recognition.

Dissipative Quantum Neural Networks (DQNNs)

Use quantum perceptrons in a dissipative framework for robust learning in quantum environments.

Applications: Supervised learning with quantum…