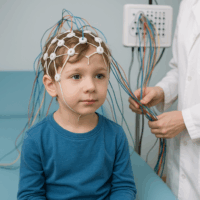

# Understanding Labeled and Unlabeled Data in Machine Learning: A Comprehensive Guide

In the realm of machine learning, data is the foundation upon which models are built. However, not all data is created equal. The distinction between labeled and unlabeled data is fundamental to understanding how different machine learning algorithms function. In this guide, we’ll explore what labeled and unlabeled data are, why they are important, and provide practical examples, including code snippets, to illustrate their usage.

## What is Labeled Data?

Labeled data refers to data that comes with tags or annotations that identify certain properties or outcomes associated with each data point. In other words, each data instance has a corresponding “label” that indicates the category, value, or class it belongs to. Labeled data is essential for supervised learning, where the goal is to train a model to make predictions based on these labels.

### Example of Labeled Data

Imagine you are building a model to classify images of animals. In this case, labeled data might look something like this:

    { "image1.jpg": "cat", "image2.jpg": "dog", "image3.jpg": "bird" }

Each image (input) is associated with a label (output) that indicates the type of animal shown in the image. The model uses these labels to learn and eventually predict the animal type in new, unseen images.

### Code Example: Working with Labeled Data in Python

A simple way to work with labeled data in Python is the popular machine learning library scikit-learn together with the Iris dataset. Iris is a classic labeled dataset in which each row represents a flower and the label indicates its species. A model is trained to classify the species based on the features provided.

## What is Unlabeled Data?

Unlabeled data, on the other hand, does not come with any labels. It consists only of input data without any associated output.
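
To make the contrast concrete, the following minimal sketch reuses the animal-image file names from the labeled-data example. The variable names (`labeled_data`, `unlabeled_data`, `training_pairs`) are illustrative assumptions, not part of any library.

```python
# Labeled data: every input (a file name here) is paired with a label.
labeled_data = {
    "image1.jpg": "cat",
    "image2.jpg": "dog",
    "image3.jpg": "bird",
}

# Unlabeled data: the same inputs with the labels dropped.
unlabeled_data = list(labeled_data)

# A supervised learner consumes (input, label) pairs ...
training_pairs = list(labeled_data.items())

# ... while an unsupervised learner only ever sees the inputs.
print(training_pairs)
print(unlabeled_data)
```

The only difference between the two structures is the presence of the label, which is exactly what determines whether a supervised method can be applied.
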
Unlabeled data is crucial for unsupervised learning, where the model tries to find patterns, groupings, or structures in the data without predefined labels.

### Example of Unlabeled Data

Continuing with our animal images example, unlabeled data would look like this:

    [ "image1.jpg", "image2.jpg", "image3.jpg" ]

Here, the images are provided without any labels indicating what animal is in the picture. The goal in unsupervised learning would be to group these images based on similarities, perhaps clustering them into categories that a human might interpret as “cat,” “dog,” or “bird.”

### Code Example: Working with Unlabeled Data in Python

K-Means clustering is a simple way to group similar unlabeled data points. Although the Iris dataset includes labels, we can pretend they don’t exist and use K-Means to group the data into clusters. The model attempts to identify natural groupings in the data based on the features alone.

## The Importance of Labeled vs. Unlabeled Data

The distinction between labeled and unlabeled data drives the choice of machine learning approach:

- Supervised Learning: Relies on labeled data to teach the model how to make predictions. Examples include classification and regression tasks.
- Unsupervised Learning: Involves finding patterns in data without any labels. Examples include clustering, anomaly detection, and association rule learning.
- Semi-Supervised Learning: Combines both labeled and unlabeled data, using a small labeled dataset to guide the learning process on a larger unlabeled dataset.

## Real-World Applications

Labeled and unlabeled data have diverse applications across different fields:

- Healthcare: Labeled data is used to train models to diagnose diseases, while unlabeled data might be used in research to discover new patterns or anomalies in patient data.
- E-commerce: Labeled data helps in recommending products to users, while unlabeled data might be used to segment users into different groups for targeted marketing.
- Finance: Labeled data is used in fraud detection models, whereas unlabeled data might help in identifying emerging risks or market trends.

Understanding the nature of your data, whether labeled or unlabeled, enables you to choose the right approach and tools for your machine learning project. With this foundational knowledge, you’re better equipped to dive into more advanced topics, such as the techniques for handling limited labeled data, which we’ll explore in the continuation of this article.

# Mastering Machine Learning with Limited Labeled Data: In-Depth Techniques and Examples

In the world of machine learning, data is king. However, labeled data, which is crucial for training models, is often hard to come by. Labeling data is expensive, time-consuming, and sometimes impractical. Despite these challenges, machine learning has advanced to offer several powerful techniques that allow for effective learning even with limited labeled data. This guide explores these techniques in detail, providing practical examples and insights to help you implement them successfully.

## 1. Unsupervised Pretraining

Unsupervised pretraining is a cornerstone technique for dealing with limited labeled data. It involves initially training a model on a large set of unlabeled data to learn general features, which are then fine-tuned using a smaller set of labeled data.

### How It Works

- Pretraining on Unlabeled Data: The model is trained on unlabeled data to learn general features. For example, an autoencoder might be used to compress and then reconstruct images, thereby learning the key features of the images without needing labels.
- Fine-Tuning on Labeled Data: The pretrained model is then fine-tuned with labeled data, adjusting its parameters for the specific task.
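
The two steps above can be sketched in plain Python. This is a toy illustration under stated assumptions rather than a production recipe: the synthetic 2-D clusters, the one-unit linear autoencoder with tied weights, the learning rates and epoch counts, and names such as `sample`, `unlabeled`, and `labeled` are all hypothetical choices made to keep the example small.

```python
import math
import random

rng = random.Random(0)

def sample(center, n):
    """Draw n 2-D points around `center` (toy stand-in for real inputs)."""
    return [(center[0] + rng.gauss(0, 0.5), center[1] + rng.gauss(0, 0.5))
            for _ in range(n)]

def sigmoid(t):
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

# Assumed setup: plenty of unlabeled inputs, but only six labeled ones.
unlabeled = sample((-2, -2), 100) + sample((2, 2), 100)
rng.shuffle(unlabeled)
labeled = ([(x, 0) for x in sample((-2, -2), 3)]
           + [(x, 1) for x in sample((2, 2), 3)])

# Stage 1: pretraining. A one-unit linear autoencoder with tied weights
# encodes z = w.x and reconstructs x_hat = z * w; no labels are used.
w = [0.1, 0.0]
for _ in range(5):
    for x in unlabeled:
        z = w[0] * x[0] + w[1] * x[1]
        r = (x[0] - z * w[0], x[1] - z * w[1])  # reconstruction error
        rw = r[0] * w[0] + r[1] * w[1]
        # Gradient step on the squared reconstruction error.
        w = [w[i] + 0.005 * 2 * (rw * x[i] + z * r[i]) for i in range(2)]

# Stage 2: fine-tuning. Add a logistic output on top of the encoder and
# update both the new head (a, b) and the pretrained weights w with labels.
a, b = 0.0, 0.0
for _ in range(200):
    for x, y in labeled:
        z = w[0] * x[0] + w[1] * x[1]
        err = sigmoid(a * z + b) - y  # derivative of log loss w.r.t. the logit
        grad_w = [err * a * x[i] for i in range(2)]
        a -= 0.05 * err * z
        b -= 0.05 * err
        w = [w[i] - 0.05 * grad_w[i] for i in range(2)]

def predict(x):
    """Classify a point with the fine-tuned encoder and head."""
    z = w[0] * x[0] + w[1] * x[1]
    return 1 if sigmoid(a * z + b) >= 0.5 else 0

# Evaluate on fresh points drawn from the same two clusters.
test_set = ([(x, 0) for x in sample((-2, -2), 50)]
            + [(x, 1) for x in sample((2, 2), 50)])
accuracy = sum(predict(x) == y for x, y in test_set) / len(test_set)
print(f"held-out accuracy: {accuracy:.2f}")
```

The encoder learns a useful direction in the data from the 200 unlabeled points alone, so the six labeled points only need to orient and calibrate the decision boundary. Real systems replace the one-unit encoder with a deep network, but the division of labor between the two stages is the same.
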
### Example

In Natural Language Processing (NLP), models like BERT and GPT are pretrained on massive unlabeled text corpora. After this pretraining, they are fine-tuned on specific tasks like sentiment analysis or question answering using much smaller labeled datasets.

### Real-World Application

The BERT model, developed by Google, is a prime example. BERT is first pretrained on a large text corpus using tasks like masked language modeling, where the model predicts missing words in a sentence. It is then fine-tuned on smaller datasets for specific NLP tasks, achieving state-of-the-art results in many benchmarks.

## 2. Pretraining on an Auxiliary Task

Pretraining on an auxiliary task leverages a related task with abundant labeled data to improve performance on your main task, which has limited labeled data.

### How It Works

- Identify a Related Task: Find a related task…