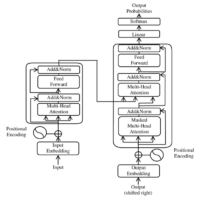

Understanding Gradient Clipping and Weight Initialization Techniques in Deep Learning

In this part, we explore gradient clipping and weight initialization in more detail. Both techniques help deep learning models train efficiently and avoid exploding or vanishing gradients.

Gradient Clipping: Controlling Exploding Gradients

When training deep learning models, especially very deep or recurrent neural networks (RNNs), one of the main challenges is dealing with exploding gradients. This happens when the gradients (which are used to update the model’s weights) grow too large during backpropagation, causing unstable training or even numerical overflow. Gradient clipping limits the magnitude of the gradients during training. Here’s how it works and why it’s useful.

How Gradient Clipping Works:

During backpropagation, a gradient is calculated for each parameter. If the gradient exceeds a predefined threshold, it is scaled down or truncated to fit within that threshold. There are two main types of gradient clipping:

Norm-based clipping: The norm (typically the L2 norm) of the entire gradient vector is computed. If the norm exceeds the threshold, every gradient is scaled by the same factor, so the direction of the update is preserved.

Value-based clipping: Each gradient component is checked independently. Any component whose absolute value exceeds the threshold is clipped to the threshold, which can change the direction of the update.

Why Gradient Clipping Matters:

Prevents Divergence: Large gradients can change the model’s parameters too drastically, leading to divergence, where the loss blows up and the model fails to learn anything meaningful.

Stabilizes Training: Keeping the gradients bounded keeps the size of each update bounded, so training stays smooth and stable.

Applications in RNNs and Deep Networks: RNNs, including LSTMs, process long sequences and are particularly prone to exploding gradients. Gradient clipping helps these models keep learning across many time steps.
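To make the difference between the two clipping styles concrete, here is a minimal, framework-free sketch in plain Python. The function names `clip_by_norm` and `clip_by_value` and the example gradient are illustrative assumptions, not any library's API; real frameworks apply the same idea to tensors.

```python
import math


def clip_by_norm(grads, max_norm):
    """Rescale the whole gradient vector so its L2 norm is at most max_norm."""
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm <= max_norm:
        return list(grads)  # already within the threshold, leave unchanged
    scale = max_norm / total_norm
    return [g * scale for g in grads]  # same direction, smaller magnitude


def clip_by_value(grads, clip_value):
    """Clamp each gradient component independently to [-clip_value, clip_value]."""
    return [max(-clip_value, min(clip_value, g)) for g in grads]


# Hypothetical gradient with L2 norm 5.0
grads = [3.0, -4.0, 0.0]

by_norm = clip_by_norm(grads, max_norm=1.0)      # approximately [0.6, -0.8, 0.0]
by_value = clip_by_value(grads, clip_value=1.0)  # [1.0, -1.0, 0.0]

print("norm-clipped: ", by_norm)
print("value-clipped:", by_value)
```

Norm clipping keeps the 3:4 ratio between the first two components, while value clipping flattens both to the same magnitude. This is one reason norm-based clipping is often preferred when the direction of the update matters.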
Weight Initialization: Setting the Stage for Learning

Weight initialization refers to the process of setting the initial values of a neural network’s weights before training. Proper weight initialization is crucial because it helps prevent two major problems: vanishing gradients (where gradients become too small, slowing learning) and exploding gradients (where gradients become too large, leading to unstable training). Here’s a breakdown of the most common weight initialization techniques:

Zero Initialization: All weights are set to zero at the start of training. Problem: If all weights are the same, every neuron in a layer computes the same output and receives the same gradient update, so the neurons never become different from one another. This symmetry problem prevents the network from learning effectively.

Random Initialization: Weights are initialized randomly, usually from a normal or uniform distribution. This breaks the symmetry between neurons, allowing them to learn different things. Challenges: If the weights are initialized too large, the network might suffer from exploding gradients. If they are too small, gradients may vanish, causing slow learning.

Xavier (Glorot) Initialization: Xavier initialization is widely used for layers with sigmoid or tanh activations. It aims to keep the variance of the inputs and outputs of each layer consistent, which helps prevent vanishing or exploding gradients. Formula (normal-distribution form):

\[ W \sim \mathcal{N}\left(0,\ \frac{2}{n_{\text{in}} + n_{\text{out}}}\right) \]

where \(n_{\text{in}}\) is the number of input units and \(n_{\text{out}}\) is the number of output units. Impact: By keeping the variance balanced across layers, Xavier initialization helps signals (and gradients) flow smoothly through the network during both forward and backward passes.

He Initialization: He initialization is specifically designed for use with ReLU activation functions. ReLU outputs zero for negative inputs, which roughly halves the variance of the activations, so this method doubles the weight variance to compensate. Formula (normal-distribution form):

\[ W \sim \mathcal{N}\left(0,\ \frac{2}{n_{\text{in}}}\right) \]

where \(n_{\text{in}}\) is the number of input units.
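The Xavier and He schemes can be sketched in plain Python as follows. This assumes the commonly used normal-distribution variants, with variance 2 / (n_in + n_out) for Xavier and 2 / n_in for He; the helper names, layer sizes, and seed are illustrative choices, not part of any framework.

```python
import math
import random


def xavier_normal(n_in, n_out, rng):
    """Sample an n_in x n_out weight matrix with variance 2 / (n_in + n_out)."""
    std = math.sqrt(2.0 / (n_in + n_out))
    return [[rng.gauss(0.0, std) for _ in range(n_out)] for _ in range(n_in)]


def he_normal(n_in, n_out, rng):
    """Sample an n_in x n_out weight matrix with variance 2 / n_in."""
    std = math.sqrt(2.0 / n_in)
    return [[rng.gauss(0.0, std) for _ in range(n_out)] for _ in range(n_in)]


def sample_variance(matrix):
    """Empirical variance over all entries of a weight matrix."""
    values = [w for row in matrix for w in row]
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


rng = random.Random(0)   # fixed seed so the run is repeatable
n_in, n_out = 256, 128   # hypothetical layer sizes

w_xavier = xavier_normal(n_in, n_out, rng)
w_he = he_normal(n_in, n_out, rng)

xavier_var = sample_variance(w_xavier)
he_var = sample_variance(w_he)

print("Xavier target:", 2.0 / (n_in + n_out), "sampled:", xavier_var)
print("He target:    ", 2.0 / n_in, "sampled:", he_var)
```

The sampled variances should land close to their targets. For the same layer, He initialization produces larger weights than Xavier, which offsets the activations that ReLU sets to zero.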
Advantage of He initialization: It reduces the risk of neurons starting out in “dead ReLU” states, where they output zero for every input and stop learning.

Why Gradient Clipping and Weight Initialization are Critical

Gradient Clipping: Helps manage large gradients, preventing them from disrupting the learning process. This is especially important in deep networks and RNNs, which are prone to exploding gradients.

Weight Initialization: Proper initialization helps gradients flow through the network correctly. Without it, networks may suffer from vanishing or exploding gradients, leading to poor performance and slow training. Techniques like Xavier and He initialization improve gradient propagation, which typically leads to faster convergence and better overall model performance.

By combining these techniques, deep learning models can be trained faster and more reliably, even with complex architectures or challenging datasets.

Practical Implementation of Gradient Clipping and Weight Initialization

In this section, we will explore how to apply gradient clipping and weight initialization techniques in deep learning frameworks like **Keras** and **PyTorch**. These examples show how to use these methods to stabilize training and ensure efficient learning.

Gradient Clipping in Keras

Keras provides built-in options for clipping gradients during training, exposed as the `clipnorm` and `clipvalue` arguments of its optimizers. Gradient clipping can be performed either by norm or by value.

1. Gradient Clipping by Norm in Keras

This method rescales the gradients if their norm exceeds a given threshold, keeping them within a stable range.

2. Gradient Clipping by Value in Keras

Gradient clipping by value restricts each individual gradient component to a fixed range, preventing large updates to model weights.

Weight Initialization…

Exploring Gradient Clipping & Weight Initialization in Deep Learning – Day 44