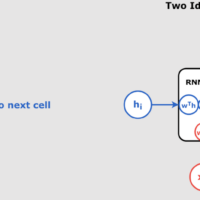

# Time Series Forecasting with Recurrent Neural Networks (RNNs): A Complete Guide

## Introduction

Time series data is all around us: from stock prices and weather patterns to daily ridership on public transport systems. Accurately forecasting future values in a time series is a challenging task, but Recurrent Neural Networks (RNNs) have proven to be highly effective at this. In this article, we will explore how RNNs can be applied to time series forecasting, explain the key concepts behind them, and demonstrate how to clean, prepare, and visualize time series data before feeding it into an RNN.

## What Is a Recurrent Neural Network (RNN)?

A Recurrent Neural Network (RNN) is a type of neural network specifically designed for sequential data, such as time series, where the order of inputs matters. Unlike traditional feed-forward neural networks, RNNs have loops that allow them to carry information from previous inputs to future inputs. This makes them highly suitable for tasks where temporal dependencies are critical, such as language modeling or time series forecasting.

## How RNNs Learn: Backpropagation Through Time (BPTT)

### Understanding BPTT

In a traditional feed-forward neural network, backpropagation is used to calculate how much each weight contributes to the error at each layer. In RNNs, the same weights are shared across time steps, so the backpropagation process is extended “through time.” Backpropagation Through Time (BPTT) calculates the gradients at each time step in the sequence and propagates the error backward through all previous time steps. This allows the RNN to adjust its weights based on the error at each time step, learning the temporal dependencies in the data.

### BPTT and Time Series Forecasting

When using an RNN for time series forecasting, BPTT allows the network to learn from sequences of past values.
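
To make the shared-weight idea concrete, here is a minimal sketch of BPTT for a toy RNN with a single scalar hidden unit, written in plain Python. It is an illustration under stated assumptions, not how a deep learning framework implements it: the function names `rnn_forward` and `bptt`, the parameter values, and the seven input values are all made up for this example, and the loss is a squared error on the final prediction only.

```python
import math


def rnn_forward(xs, w_x, w_h, b):
    """Run a scalar RNN over xs; hs[0] is the initial state, hs[t] the state after step t."""
    hs = [0.0]
    for x in xs:
        # The same weights (w_x, w_h, b) are reused at every time step.
        hs.append(math.tanh(w_x * x + w_h * hs[-1] + b))
    return hs


def bptt(xs, target, w_x, w_h, b, w_y):
    """Return the loss and its gradients, computed by backpropagation through time."""
    hs = rnn_forward(xs, w_x, w_h, b)
    y_hat = w_y * hs[-1]                      # prediction from the last hidden state
    loss = 0.5 * (y_hat - target) ** 2

    grads = {"w_x": 0.0, "w_h": 0.0, "b": 0.0, "w_y": (y_hat - target) * hs[-1]}
    dh = (y_hat - target) * w_y               # error arriving at the last hidden state
    for t in range(len(xs), 0, -1):           # walk backward through the time steps
        da = dh * (1.0 - hs[t] ** 2)          # back through tanh
        grads["w_x"] += da * xs[t - 1]        # shared weights accumulate over all steps
        grads["w_h"] += da * hs[t - 1]
        grads["b"] += da
        dh = da * w_h                         # pass the error on to the previous step
    return loss, grads


# Hypothetical inputs: seven scaled daily values and a made-up next-day target.
xs = [0.5, 0.6, 0.4, 0.7, 0.8, 0.3, 0.2]
loss, grads = bptt(xs, target=0.5, w_x=0.8, w_h=0.5, b=0.1, w_y=1.2)
print(f"loss = {loss:.4f}")
for name, value in grads.items():
    print(f"d(loss)/d({name}) = {value:+.4f}")
```

Because `w_x`, `w_h`, and `b` are reused at every step, their gradients are sums of contributions from all seven time steps, which is the sense in which the error is propagated "through time." A gradient-descent update would then subtract a small multiple of each gradient from the corresponding weight.
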
To predict tomorrow’s ridership from the past 7 days, for instance, the RNN uses BPTT to adjust its weights and minimize the prediction error across all time steps.

## Preparing Time Series Data for RNNs

Before we can feed time series data into an RNN, we need to clean and preprocess it. In this example, we’ll use ridership data from the Chicago Transit Authority (CTA) to demonstrate how to prepare a dataset for time series forecasting.

### Step 1: Loading and Cleaning the Data

First, let’s load the data using Python’s pandas library. We will sort the data, index it by date, remove unnecessary columns, and ensure there are no duplicates.

```python
import pandas as pd
from pathlib import Path

# Load the dataset, parsing the date column as datetimes
path = Path("datasets/ridership/CTA_Ridership_Daily_Boarding_Totals.csv")
df = pd.read_csv(path, parse_dates=["service_date"])

# Rename columns for easier reference
df.columns = ["date", "day_type", "bus", "rail", "total"]

# Sort chronologically and index by date so rows can be selected by date range
df = df.sort_values("date").set_index("date")

df = df.drop("total", axis=1)  # Drop the 'total' column (bus + rail)
df = df.drop_duplicates()      # Remove duplicate rows
```

### Explanation: Why Data Cleaning Matters for RNNs

- **Chronological Order:** Time series models rely on the chronological order of data, so sorting the data by date is crucial. Indexing by date also makes it possible to slice the data by date range.
- **Duplicate Removal:** Duplicate entries can confuse the model, so we remove any duplicate rows to ensure the data is consistent.
- **Column Simplification:** The `total` column is just the sum of `bus` and `rail`, so dropping it avoids feeding redundant information into the model.

Once the data is cleaned and structured, it can serve as the basis for training an RNN.

## Visualizing Time Series Data

Before feeding the data into an RNN, it’s useful to visualize it to identify patterns, trends, and seasonality.
Here’s how you can visualize the ridership data:

```python
import matplotlib.pyplot as plt

# Plot the time series data from March to May 2019
# (slicing by date strings requires df to be indexed by date)
df["2019-03":"2019-05"].plot(grid=True, marker=".", figsize=(8, 3.5))
plt.show()
```

### Understanding the Plot: Weekly Seasonality

The plot reveals clear weekly seasonality: ridership peaks during weekdays and drops on weekends. This repeating pattern is a key feature that the RNN will learn during training, and learning it helps the RNN make more accurate predictions of future ridership values.

## Making the Time Series Stationary with Differencing

Many time series models, including traditional statistical models like ARIMA, require the data to be stationary (i.e., its mean and variance do not change over time). One way to make a time series stationary is…