UNLOCKING RNN, Layer Normalization, and LSTMs – Mastering the Depth of RNNs in Deep Learning – Part 8 of RNN Series by INGOAMPT – Day 62

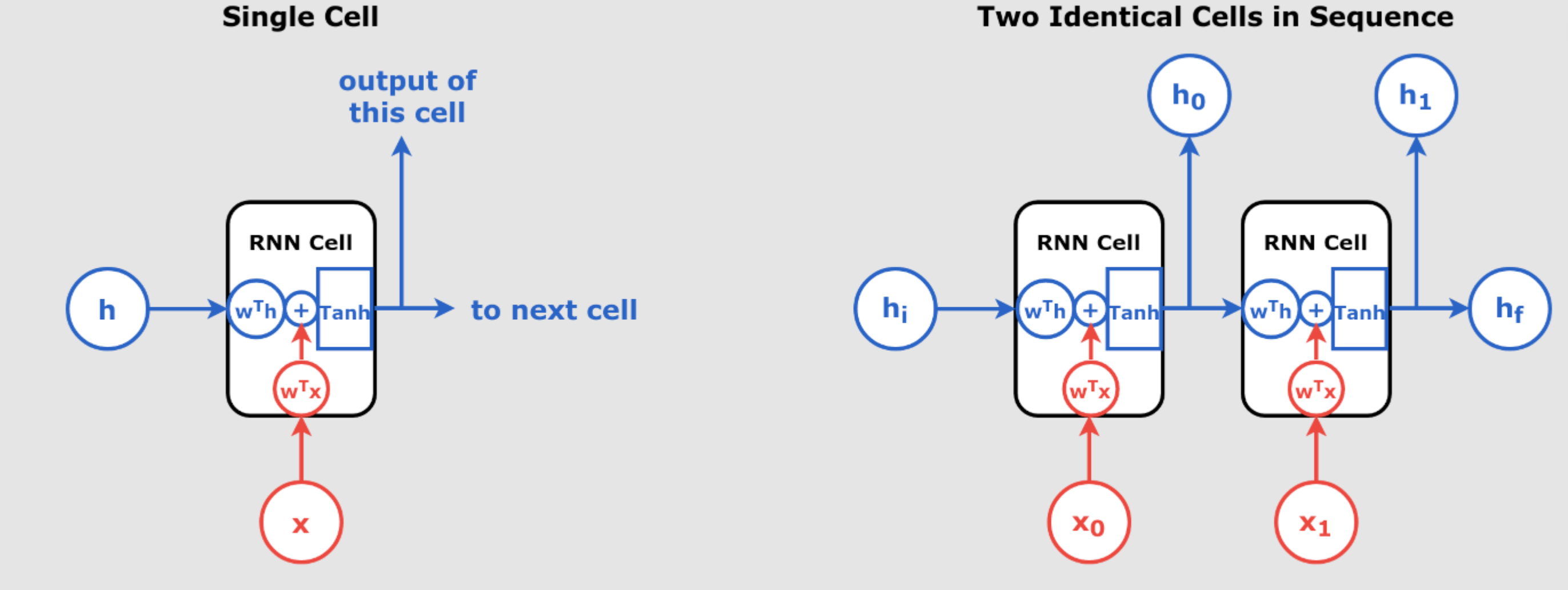

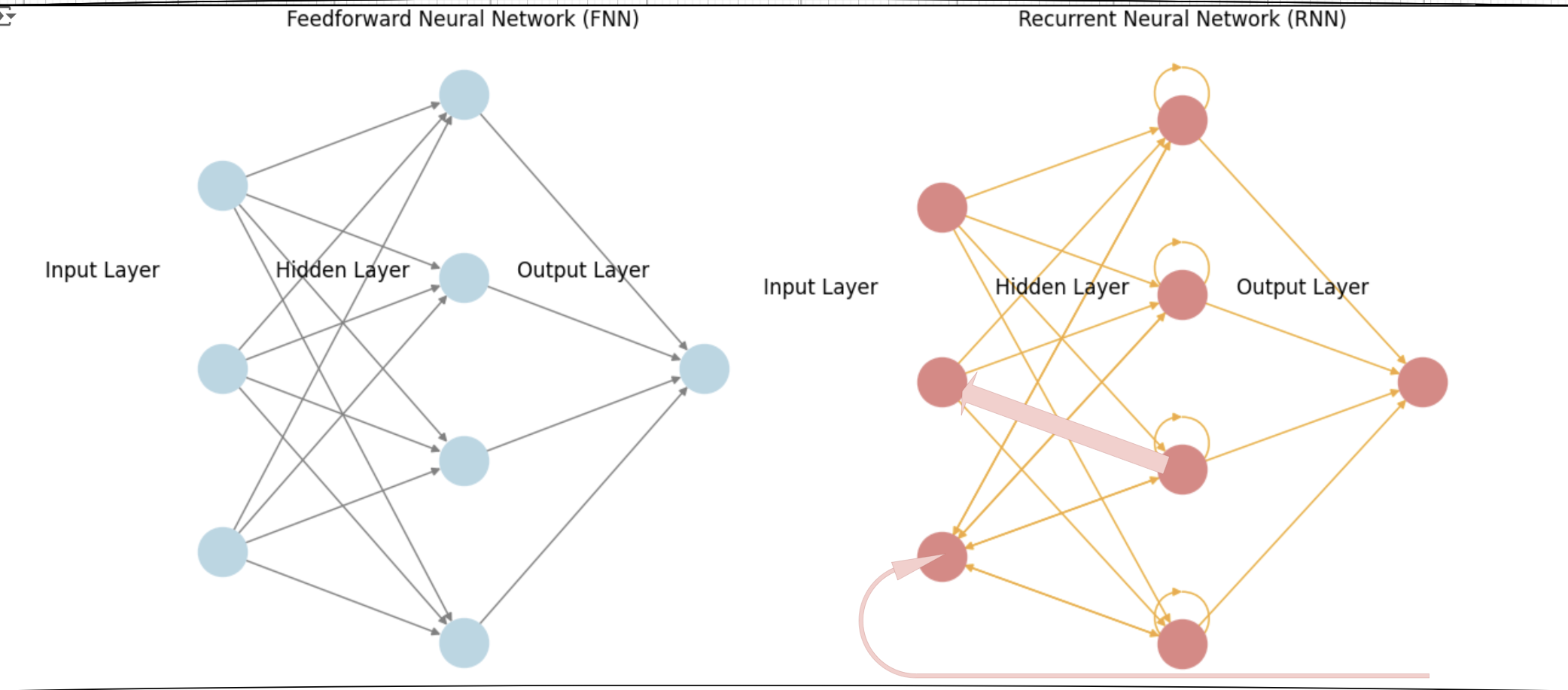

A Deep Dive into Recurrent Neural Networks, Layer Normalization, and LSTMs

In the previous days' articles we covered RNNs in detail. As explained there, Recurrent Neural Networks (RNNs) are a cornerstone of handling sequential data, in tasks ranging from time series analysis to natural language processing. However, training RNNs comes with challenges, particularly long sequences and unstable gradients. This post covers how Layer Normalization (LN) addresses these challenges and how Long Short-Term Memory (LSTM) networks provide a more robust solution to memory retention in sequence models.

The Challenges of RNNs: Long Sequences and...