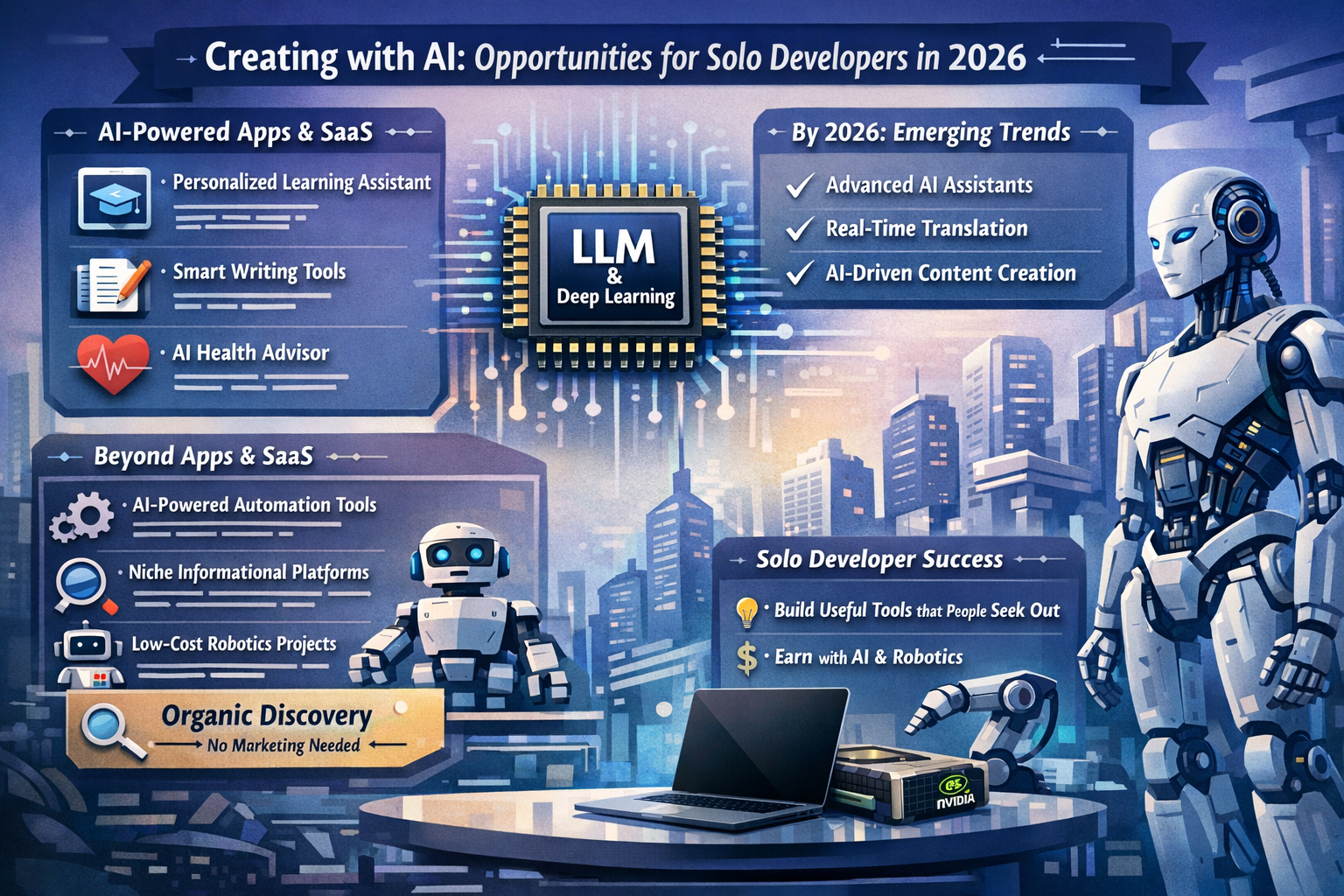

Solo-developer AI products in early 2026: iOS, SaaS, marketplaces, and low-cost robotics

Executive synthesis

Today, the opportunity for solo developers has shifted from “build […]