New in 2024 – 2025: MLX and Transformers. Comparing Custom Deep Learning Models for iOS with MLX on Apple Silicon vs. PyTorch

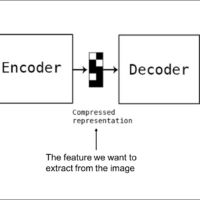

The development of deep learning applications for iOS has become increasingly sophisticated with Apple’s M-series chips, which allow for powerful local processing on mobile devices. As demand grows for high-performance, optimized models that can integrate seamlessly with iOS apps, Apple Silicon has paved the way for specialized tools like Machine Learning eXtensions (MLX). This blog post walks through the process of building a custom transformer model for an iOS app using MLX on Apple Silicon, compares it with a similar PyTorch-based approach, and explains how to deploy these models on iOS with Swift.

Overview of MLX and PyTorch on Apple Silicon

Apple Silicon’s M-series chips introduced a unified memory architecture and accelerated performance specifically suited for machine learning workloads. MLX is designed to leverage these hardware advantages, offering a streamlined API that simplifies the training and deployment process. PyTorch, while highly versatile, requires more steps to optimize and deploy models specifically for iOS.

Key Differences: MLX vs. PyTorch

- Performance Optimization: MLX is designed to maximize Apple Silicon’s hardware capabilities, with lazy computation and optimized memory management.

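- Core ML Export: PyTorch models reach Core ML through a conversion step with coremltools, historically via ONNX (Open Neural Network Exchange) and in current releases by tracing the model. This post treats MLX export as a more direct path, though coremltools does not list MLX among its documented conversion sources, so verify it against your version.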

- Flexibility: PyTorch is widely compatible across various platforms, making it a go-to for general model development, while MLX is optimized exclusively for Apple Silicon.
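The lazy computation mentioned in the first bullet can be illustrated with a small, framework-free sketch. This is not MLX's implementation; the `Lazy` class and `constant` helper are hypothetical names used only to show the idea that operations build a graph and nothing runs until a result is explicitly requested.

```python
class Lazy:
    """A deferred value: stores how to compute a result, not the result itself."""

    def __init__(self, fn, *deps):
        self.fn = fn            # function that produces the value
        self.deps = deps        # Lazy inputs this node depends on
        self.cached = None
        self.evaluated = False

    def __add__(self, other):
        return Lazy(lambda a, b: a + b, self, other)

    def __mul__(self, other):
        return Lazy(lambda a, b: a * b, self, other)

    def eval(self):
        # Compute inputs first, then this node; cache so the work is done once.
        if not self.evaluated:
            args = [d.eval() for d in self.deps]
            self.cached = self.fn(*args)
            self.evaluated = True
        return self.cached


def constant(value):
    return Lazy(lambda: value)


a, b = constant(2.0), constant(3.0)
c = a * b + a          # builds a graph; nothing is computed yet
unused = a * a * a     # never evaluated, so its work is never done
result = c.eval()      # computation happens here
```

The `unused` node shows the practical benefit: intermediate values that are never requested are never computed or stored.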

Step-by-Step Guide: Building and Deploying a Transformer Model

Let’s dive into the step-by-step process of building and deploying a custom transformer model with both MLX and PyTorch.

Step 1: Installation

PyTorch

    pip install torch

MLX

    pip install mlx

MLX is built to capitalize on the architecture of M-series chips, offering an API similar to PyTorch but optimized for Apple hardware.
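Since MLX targets Apple Silicon, it can help to confirm the environment before going further. The following is a minimal sketch using only the standard library; the helper names `is_apple_silicon` and `is_installed` are hypothetical, and the check assumes that macOS on an M-series machine reports `Darwin` and `arm64`.

```python
import importlib.util
import platform


def is_apple_silicon():
    # macOS on M-series hardware reports system "Darwin" and machine "arm64".
    return platform.system() == "Darwin" and platform.machine() == "arm64"


def is_installed(package):
    # find_spec returns None when a top-level package cannot be found.
    return importlib.util.find_spec(package) is not None


status = {
    "apple_silicon": is_apple_silicon(),
    "torch": is_installed("torch"),
    "mlx": is_installed("mlx"),
}
print(status)
```

If `apple_silicon` is false, the PyTorch half of this walkthrough still applies, but the MLX steps will not run on that machine.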

Step 2: Defining a Transformer Model

Both PyTorch and MLX use similar syntax for defining model architectures. Here’s how to create a simple transformer model in each:

PyTorch Code

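    import torch.nn as nn

    class TransformerModel(nn.Module):
        def __init__(self, vocab_size, embed_size, num_heads, num_layers):
            super().__init__()
            self.embedding = nn.Embedding(vocab_size, embed_size)
            # nn.Transformer expects both src and tgt, so use an encoder-only
            # stack that accepts a single input sequence.
            layer = nn.TransformerEncoderLayer(embed_size, num_heads, batch_first=True)
            self.transformer = nn.TransformerEncoder(layer, num_layers)
            self.fc = nn.Linear(embed_size, vocab_size)

        def forward(self, x):
            x = self.embedding(x)    # (batch, seq) -> (batch, seq, embed_size)
            x = self.transformer(x)
            return self.fc(x)        # (batch, seq, vocab_size)

MLX Code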

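    import mlx.core as mx
    import mlx.nn as nn

    class TransformerModel(nn.Module):
        def __init__(self, vocab_size, embed_size, num_heads, num_layers):
            super().__init__()
            self.embedding = nn.Embedding(vocab_size, embed_size)
            # Encoder-only stack; assumes mlx.nn.TransformerEncoder(num_layers, dims, num_heads).
            # Note that the argument order differs from PyTorch.
            self.transformer = nn.TransformerEncoder(num_layers, embed_size, num_heads)
            self.fc = nn.Linear(embed_size, vocab_size)

        # MLX modules define __call__ rather than forward.
        def __call__(self, x):
            x = self.embedding(x)
            x = self.transformer(x, None)  # None = no attention mask
            return self.fc(x)

Step 3: Setting Up the Training Process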

With both PyTorch and MLX, you can set up the training process with an optimizer and loss function. Here’s an example of each:

PyTorch Code

    import torch.optim as optim

    loss_fn = nn.CrossEntropyLoss()
    optimizer = optim.SGD(model.parameters(), lr=0.01)

    for epoch in range(num_epochs):
        optimizer.zero_grad()
        outputs = model(inputs)  # (batch, seq, vocab_size)
        # CrossEntropyLoss expects (N, classes) logits and (N,) integer targets.
        loss = loss_fn(outputs.flatten(0, 1), targets.flatten())
        loss.backward()
        optimizer.step()

MLX Code

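    import mlx.optimizers as optim

    def loss_fn(model, inputs, targets):
        # cross_entropy takes logits and integer class targets.
        return nn.losses.cross_entropy(model(inputs), targets, reduction="mean")

    optimizer = optim.SGD(learning_rate=0.01)
    # MLX has no loss.backward(); gradients come from a function transformation.
    loss_and_grad = nn.value_and_grad(model, loss_fn)

    for epoch in range(num_epochs):
        loss, grads = loss_and_grad(model, inputs, targets)
        optimizer.update(model, grads)
        mx.eval(model.parameters(), optimizer.state)  # force the lazy computation

MLX’s lazy evaluation ensures that arrays are only computed when necessary (here, when `mx.eval` is called), which can reduce memory usage on Apple Silicon.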
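To make the loss and update rule shared by both training loops concrete, here is a minimal worked example in plain Python. It is a simplification: the logits themselves are treated as the trainable parameters, whereas a real model updates its weights. The function names are hypothetical and chosen for illustration.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, target):
    # Negative log-probability assigned to the correct class.
    return -math.log(softmax(logits)[target])


def sgd_step(logits, target, lr):
    # For softmax + cross-entropy, d(loss)/d(logit_i) = p_i - 1[i == target].
    probs = softmax(logits)
    grads = [p - (1.0 if i == target else 0.0) for i, p in enumerate(probs)]
    return [z - lr * g for z, g in zip(logits, grads)]


logits = [0.0, 0.0, 0.0]   # untrained scores for a 3-token vocabulary
target = 2                 # index of the correct next token
before = cross_entropy(logits, target)
logits = sgd_step(logits, target, lr=0.01)
after = cross_entropy(logits, target)
```

With uniform logits the starting loss equals the log of the vocabulary size, and a single SGD step with the same learning rate as above lowers it slightly.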

Step 4: Exporting to Core ML

One of the most significant differences between PyTorch and MLX emerges at the export stage.

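PyTorch (Tracing and Core ML Conversion)

Recent coremltools releases no longer include the ONNX converter, so the conversion starts from a traced PyTorch model rather than an exported `model.onnx` file:

    import torch
    import coremltools as ct

    model.eval()
    traced = torch.jit.trace(model, input_sample)

After tracing, use coremltools to convert the model to Core ML format:

    import numpy as np

    mlmodel = ct.convert(
        traced,
        inputs=[ct.TensorType(name="input", shape=input_sample.shape, dtype=np.int32)],
        convert_to="neuralnetwork",  # .mlmodel format; ML Programs are saved as .mlpackage
    )
    mlmodel.save("TransformerModel.mlmodel")

MLX (Direct Core ML Export)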

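    import coremltools as ct

    # Assumption of this post: coremltools accepts the MLX module directly.
    mlmodel = ct.convert(model, inputs=[ct.TensorType(shape=input_sample.shape)])
    mlmodel.save("TransformerModel.mlmodel")

The appeal of this path is that it skips the intermediate conversion step, which would let developers create iOS-compatible models faster and with fewer conversion errors. Note, however, that coremltools documents TensorFlow and PyTorch as its conversion sources, so treat the call above as a sketch and verify it against your coremltools version. If it is not supported, the practical alternatives are to port the trained weights into an equivalent PyTorch model and convert that, or to run the model on-device with MLX Swift.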
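Both export snippets fix the model's input shape to `input_sample.shape`, so token sequences passed at inference time need that same length unless flexible shapes are configured. A minimal sketch of normalizing sequence length before calling the model; `pad_or_truncate`, `max_len`, and `pad_id` are hypothetical names, and the right choice of padding token depends on your tokenizer.

```python
def pad_or_truncate(token_ids, max_len, pad_id=0):
    # Cut sequences that are too long, then right-pad short ones with pad_id.
    clipped = list(token_ids[:max_len])
    return clipped + [pad_id] * (max_len - len(clipped))


fixed = pad_or_truncate([5, 9, 2], max_len=6)
```

The same logic would need to be mirrored on the Swift side before filling the `MLMultiArray`.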

Step 5: Integrating the Model with Swift in Xcode

Once the `.mlmodel` file is generated, you can integrate it directly into an iOS app in Xcode. The process is identical for models created with both PyTorch and MLX, as the `.mlmodel` format is compatible with Apple’s Core ML framework.

Swift Code for Model Integration

    import CoreML
    import SwiftUI

    struct ContentView: View {
        // TransformerModel is the class Xcode generates from TransformerModel.mlmodel.
        let model = try? TransformerModel(configuration: MLModelConfiguration())

        var body: some View {
            Text("Transformer demo")
        }

        // The feature names (`input`, `output`) and the data type depend on how
        // the model was exported; adjust them to match the generated interface.
        func predict(input: [Float]) -> MLMultiArray? {
            guard let model = model,
                  let inputMLArray = try? MLMultiArray(
                      shape: [1, NSNumber(value: input.count)], dataType: .float32)
            else { return nil }
            for (i, value) in input.enumerated() {
                inputMLArray[i] = NSNumber(value: value)
            }
            return try? model.prediction(input: inputMLArray).output
        }
    }

Key Takeaways

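- MLX Advantage: MLX leverages Apple Silicon’s hardware efficiently with features like lazy evaluation and optimized memory usage, and its API stays close to PyTorch’s. The direct Core ML export described in Step 4 would remove a conversion step, but confirm that your coremltools version supports it before relying on it.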

- Flexibility with PyTorch: PyTorch is versatile and works across different platforms, though it requires more steps to optimize for iOS and lacks MLX’s Apple-specific optimizations.

Whether you choose MLX or PyTorch depends on your target deployment and development needs. For Apple Silicon and iOS-specific applications, MLX offers streamlined, high-performance deployment. However, for projects needing cross-platform flexibility, PyTorch remains a strong choice, albeit with additional steps for iOS optimization.

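| Step | PyTorch Code | MLX Code (Apple Silicon) |
|---|---|---|
| 1. Install | `pip install torch` | `pip install mlx` |
| 2. Define Model | `torch.nn` model class (see “Defining a Transformer Model” above). | `mlx.nn` model class (see “Defining a Transformer Model” above). |
| 3. Preprocess Data | Use libraries like torchtext or NumPy to tokenize text sequences. | Similar data handling with NumPy; MLX’s lazy evaluation can reduce the memory footprint. |
| 4. Define Optimizer | SGD optimizer and cross-entropy loss (see “Setting Up the Training Process” above). | SGD optimizer and cross-entropy loss (see “Setting Up the Training Process” above). |
| 5. Train the Model | Training loop (see “Setting Up the Training Process” above). | Training loop (see “Setting Up the Training Process” above). MLX handles computations on CPU and GPU seamlessly. |
| 6. Export Model | Convert to Core ML with coremltools after an intermediate export step (see “Exporting to Core ML” above). | Core ML export with coremltools as described in “Exporting to Core ML” above. |
| 7. Integrate in Swift | Import `.mlmodel` into Xcode. | Same Core ML `.mlmodel` integration into Xcode; identical for both MLX and PyTorch-converted models. |
| 8. Swift Prediction | Swift prediction code (see “Integrating the Model with Swift in Xcode” above). | Same Swift code, as it relies on Core ML’s framework rather than the training library, allowing both models to operate identically in iOS. |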