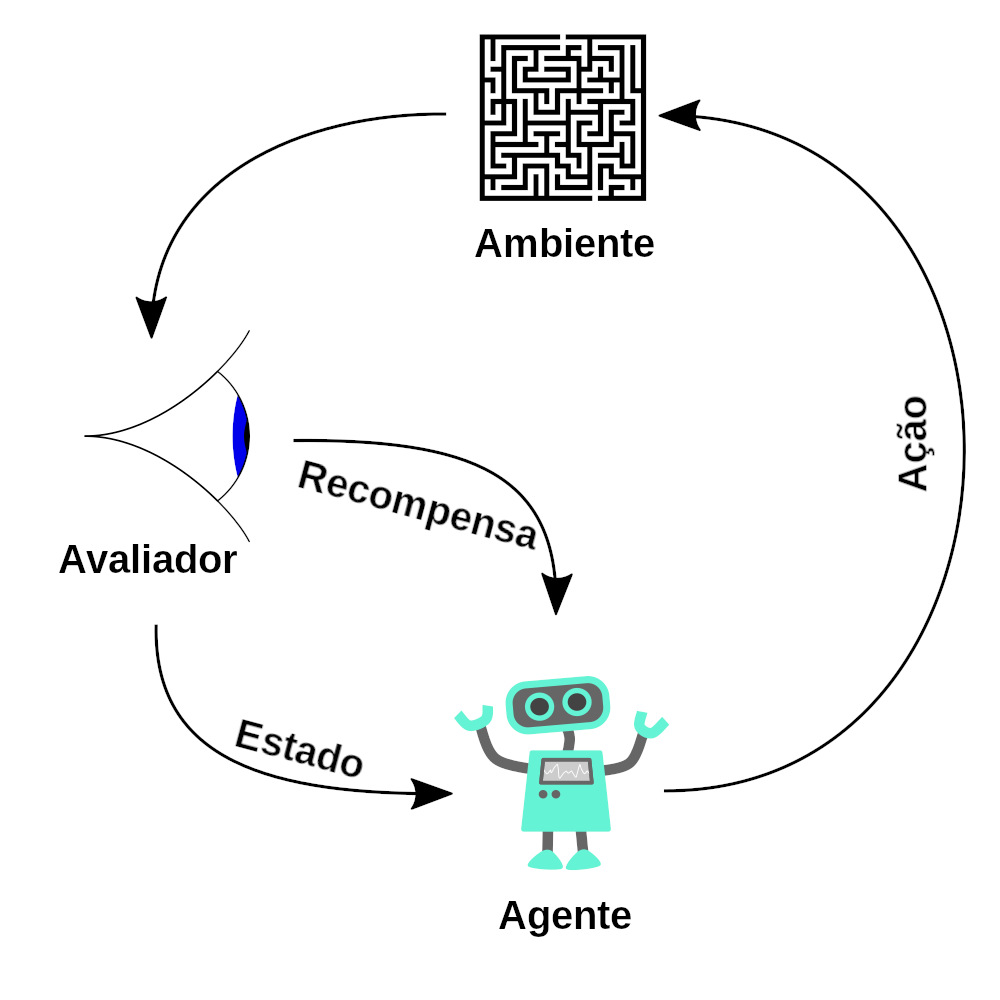

Reinforcement Learning: An Evolution from Games to Real-World Impact

Reinforcement Learning (RL) is a fascinating branch of machine learning, with roots stretching back to the 1950s. Although not always in the limelight, RL has made a significant impact in various domains, especially gaming and machine control. In 2013, a team from DeepMind, a British startup, built a system capable of learning and excelling at Atari games using only raw pixels as input, without any knowledge of the games’ rules. This breakthrough paved the way for DeepMind’s famous system, AlphaGo, which defeated world Go champions and ignited global interest in RL.

The Foundations of Reinforcement Learning: How It Works

In RL, an agent interacts with an environment, observes the outcomes, and receives feedback in the form of rewards. The agent’s objective is to maximize its cumulative reward over time, learning the best actions through trial and error.

| Term | Explanation |
| --- | --- |
| Agent | The software or system making decisions. |
| Environment | The external setting with which the agent interacts. |
| Reward | Feedback from the environment based on the agent’s actions. |

Examples of RL Applications

Here are a few tasks RL is well suited for:

| Application | Agent | Environment | Reward |
| --- | --- | --- | --- |
| Robot Control | Robot control program | Real-world physical space | Positive for approaching the target, negative for incorrect movement |
| Atari Game | Game-playing program | Game simulation | Points scored in the game |
| Board Game (e.g., Go) | Game-playing program | Game board | Reward only if it wins the game |
| Smart Thermostat | Thermostat control program | Indoor temperature settings | Positive for saving energy, negative when humans need to adjust the temperature |
| Stock Market | Trading agent | Financial market data | Monetary gains and losses |

Defining Policy: The Agent’s Guide to Action

The approach or “policy” an agent uses to decide its actions is central to RL.
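
To make the agent, environment, reward, and policy vocabulary concrete, here is a minimal sketch of one interaction loop in plain Python. Everything in it is an assumption made for illustration: `CorridorEnv`, `rule_based_policy`, and `run_episode` are hypothetical names, the environment is a toy one-dimensional version of the robot-control example (positive reward for approaching the target, negative for moving away), and none of it comes from a real RL library.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class CorridorEnv:
    """Hypothetical toy environment: a robot on a line must reach `target`."""
    target: int = 5
    position: int = 0

    def reset(self) -> int:
        """Put the robot back at the start and return the first observation."""
        self.position = 0
        return self.position

    def step(self, action: int) -> Tuple[int, float, bool]:
        """Apply an action (-1 = left, +1 = right).

        Returns (observation, reward, done).
        """
        old_distance = abs(self.target - self.position)
        self.position += action
        new_distance = abs(self.target - self.position)
        # Reward: +1 for getting closer to the target, -1 otherwise.
        reward = 1.0 if new_distance < old_distance else -1.0
        done = self.position == self.target
        return self.position, reward, done


def rule_based_policy(observation: int, target: int = 5) -> int:
    """A deterministic if-then policy: always move toward the target."""
    return 1 if observation < target else -1


def run_episode(env: CorridorEnv,
                policy: Callable[[int], int],
                max_steps: int = 20) -> float:
    """Run one episode and return the cumulative reward."""
    observation = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(observation)                   # agent decides
        observation, reward, done = env.step(action)   # environment responds
        total_reward += reward                         # feedback accumulates
        if done:
            break
    return total_reward


episode_return = run_episode(CorridorEnv(), rule_based_policy)
print(episode_return)
```

Swapping `rule_based_policy` for any other function that maps an observation to an action changes the agent's behavior without touching the environment, which is the separation the policy concept is meant to capture.
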
A policy can be as straightforward as a set of if-then rules or as complex as a deep neural network that analyzes observations and outputs actions. Policies can be deterministic or stochastic (random). When training an agent, policy search methods help identify effective policies, often through trial and error.

Policy Search and Optimization Techniques

| Method | Description |
| --- | --- |
| Brute-Force Search | Systematically tries many values of the policy parameters; not feasible for complex policies with large parameter spaces. |
| Genetic Algorithms | Generates multiple policies, selects the best, and iterates through generations with random mutations. |
| Policy Gradients (PG) | Estimates the gradient of the rewards with respect to the policy parameters and adjusts the parameters in the direction that increases rewards. |

Getting Started with OpenAI Gym

Training RL agents requires environments where the agent can safely learn and test its policies. OpenAI Gym is a popular toolkit that offers simulated environments for RL training, from Atari games to physical simulations. By using OpenAI Gym, developers can experiment with and refine RL agents without needing a physical setup.

Setting Up OpenAI Gym

To install and…

Reinforcement Learning: An Evolution from Games to Real-World Impact – Day 78