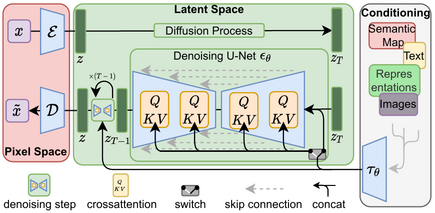

Unveiling Diffusion Models: From Denoising to Generative Art

The field of generative modeling has witnessed remarkable advancements over the past few years, with diffusion models emerging as a powerful class capable of generating high-quality, diverse images and other data types. Rooted in concepts from thermodynamics and stochastic processes, diffusion models have not only matched but, in some aspects, surpassed the performance of traditional generative models like Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs). In this blog post, we’ll delve deep into the evolution of diffusion models, understand their underlying mechanisms, and explore their wide-ranging applications and future prospects.

Table of Contents

- Introduction to Diffusion Models
- Historical Development
- Understanding Diffusion Models
- The Forward Diffusion Process (Noising)
- The Reverse Diffusion Process (Denoising)
- Training Objective
- Variance Scheduling
- Model Architecture
- Implementing Diffusion Models
- Applications of Diffusion Models
- Advancements: Latent Diffusion Models and Beyond
- Challenges and Limitations
- Future Directions
- Conclusion
- References
- Additional Resources

Introduction to Diffusion Models

Diffusion models are a class of probabilistic generative models that learn data distributions by modeling the gradual corruption and subsequent recovery of data through a Markov chain of diffusion steps. The core idea is to learn how to reverse a predefined noising process that progressively adds noise…
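To make the "Markov chain of diffusion steps" in the introduction concrete, here is a minimal sketch of the forward (noising) half only. It assumes a Gaussian step of the form x_t = sqrt(1 - beta_t) * x_{t-1} + sqrt(beta_t) * noise and a linear variance schedule; the excerpt does not specify either, and the function names (`linear_betas`, `forward_step`, `forward_diffusion`, `signal_fraction`) and default values are hypothetical, chosen only for illustration.

```python
import math
import random


def linear_betas(num_steps, beta_start=1e-4, beta_end=0.02):
    """Hypothetical linear variance schedule (an assumption, not from the post)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def forward_step(x_prev, beta, rng):
    """One Markov step: x_t = sqrt(1 - beta) * x_{t-1} + sqrt(beta) * noise."""
    keep = math.sqrt(1.0 - beta)    # how much of the previous state is kept
    spread = math.sqrt(beta)        # standard deviation of the added noise
    return [keep * v + spread * rng.gauss(0.0, 1.0) for v in x_prev]


def forward_diffusion(x0, betas, seed=0):
    """Apply every noising step in order and return the whole trajectory."""
    rng = random.Random(seed)
    trajectory = [list(x0)]
    for beta in betas:
        # Each state depends only on the one before it (the Markov property).
        trajectory.append(forward_step(trajectory[-1], beta, rng))
    return trajectory


def signal_fraction(betas):
    """Product of sqrt(1 - beta_t): the share of x0 left in the mean of x_T."""
    frac = 1.0
    for beta in betas:
        frac *= math.sqrt(1.0 - beta)
    return frac


if __name__ == "__main__":
    betas = linear_betas(1000)
    trajectory = forward_diffusion([1.0, -1.0], betas)
    print("steps taken:", len(trajectory) - 1)
    print("fraction of original signal left:", signal_fraction(betas))
```

Under these assumptions the fraction of the original signal shrinks toward zero as steps accumulate, so the final state is close to pure Gaussian noise. The generative model's job is then to learn the reverse of this chain, which this sketch does not attempt.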

Breaking Down Diffusion Models in Deep Learning – Day 75