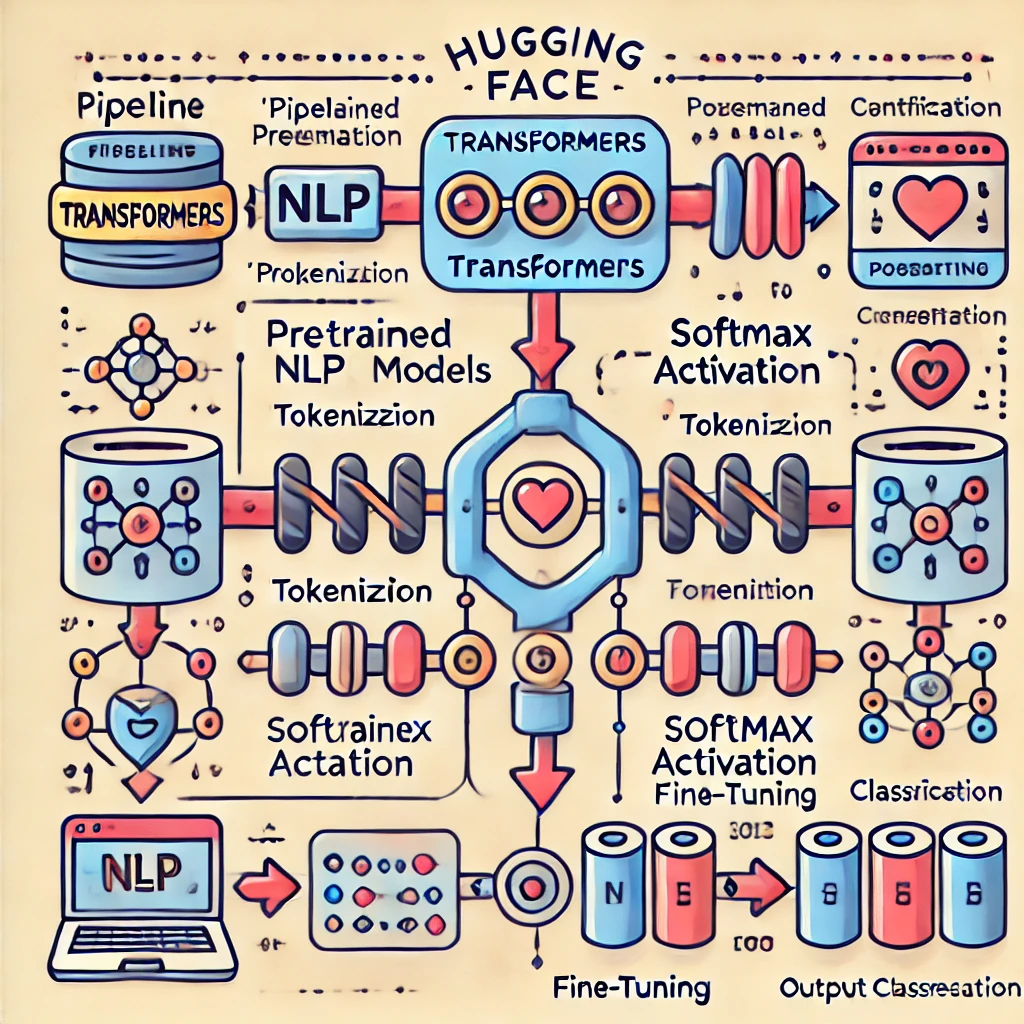

The Rise of Transformers in Vision and Multimodal Models

In this first part of our blog series, we'll explore how transformers, originally created for Natural Language Processing (NLP), have expanded into Computer Vision (CV) and into multimodal tasks, handling text, images, and video in a unified way. This sets the stage for Part 2, where we'll dive into Hugging Face and code examples for practical implementations.

1. The Journey of Transformers from NLP to Vision

The introduction of transformers in 2017 revolutionized NLP, and researchers soon realized their potential for tasks beyond text. Early vision applications paired them with Convolutional Neural Networks (CNNs). In image captioning, for example, a CNN extracted image features and a transformer replaced the Recurrent Neural Network (RNN) that had previously generated the caption.

How Transformers Replace RNNs

Transformers replaced RNNs because they capture long-range dependencies more effectively and process all positions of a sequence in parallel, whereas an RNN must step through the sequence one element at a time. This made transformers faster to train and more efficient, especially for image-based tasks where many features need to be processed at once.

2. The Emergence of Vision Transformers (ViT)

In 2020, researchers at Google proposed a fully transformer-based model for vision tasks, the Vision Transformer (ViT). ViT treats an image much like text: it splits the image into small patches and feeds the sequence of patches into a standard transformer.

How ViT Works:

- Splitting Images into Patches: Instead of passing the whole image through a CNN, ViT divides it into fixed-size patches, typically 16×16 pixels.
- Embedding Patches: Each patch is flattened into a vector and linearly projected into an embedding. A position embedding is added so the model knows where each patch came from. Each patch embedding is then treated like a word in a sentence.
- Processing Through Self-Attention: The transformer processes the patch embeddings with self-attention, which relates every patch to every other patch simultaneously.
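To make the patch-splitting and embedding steps concrete, here is a minimal NumPy sketch that assumes a 224×224 RGB input and the 16×16 patch size mentioned above. The function name `patchify_and_embed` is hypothetical. The projection matrix and position embeddings are random placeholders rather than learned weights. A full ViT would also prepend a learnable classification token before the transformer layers, which this sketch omits.

```python
import numpy as np

def patchify_and_embed(image, patch_size=16, embed_dim=768, seed=0):
    """Split an (H, W, C) image into non-overlapping patches and embed each one.

    Hypothetical helper: the projection matrix and position embeddings are
    random placeholders; in a trained ViT both are learned parameters.
    """
    h, w, c = image.shape
    if h % patch_size or w % patch_size:
        raise ValueError("image height and width must be multiples of patch_size")
    n_h, n_w = h // patch_size, w // patch_size

    # (H, W, C) -> (n_h, P, n_w, P, C) -> (n_h, n_w, P, P, C) -> (N, P*P*C)
    patches = image.reshape(n_h, patch_size, n_w, patch_size, c)
    patches = patches.transpose(0, 2, 1, 3, 4).reshape(n_h * n_w, -1)

    rng = np.random.default_rng(seed)
    projection = rng.normal(0.0, 0.02, size=(patches.shape[1], embed_dim))
    position = rng.normal(0.0, 0.02, size=(patches.shape[0], embed_dim))

    # The linear projection turns each flattened patch into a "word" embedding;
    # adding the position embedding records where the patch came from.
    tokens = patches @ projection + position
    return patches, tokens


image = np.random.default_rng(1).random((224, 224, 3))  # stand-in for a real RGB image
patches, tokens = patchify_and_embed(image)
print(patches.shape)  # (196, 768): 14 x 14 patches, each 16*16*3 values
print(tokens.shape)   # (196, 768): one embedding per patch
```

With 16×16 patches, a 224×224 image becomes a sequence of 196 tokens. That sequence is the "sentence" that the transformer's self-attention operates on.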

| Feature | CNN | Vision Transformer (ViT) |
|---|---|---|
| Input | Entire image (processed with convolutional filters) | Image patches |
| Processing style | Local (focuses on small neighborhoods) | Global (relates all patches at once) |
| Inductive bias | Strong (assumes local relationships) | Weak (learns global relationships from data) |
| Best use cases | Small to medium datasets | Large datasets (e.g., ImageNet-21k, JFT-300M) |

Inductive Bias in CNNs vs. Transformers

CNNs build in assumptions about images. They assume that pixels close to each other are related (locality) and that a pattern means the same thing wherever it appears (translation equivariance). Such built-in assumptions are called inductive biases, and they make CNNs data-efficient at image recognition. Transformers make far fewer of these assumptions. This lets them capture long-range dependencies better, but they need more training data to learn these relationships effectively.

3. Multimodal Transformers: Perceiver and GATO

The power of transformers in processing sequences has inspired multimodal models such as Perceiver and GATO. These models can handle several kinds of input, including text, images, video, and audio, within a single model.

Perceiver: Efficient Multimodal Transformer

Perceiver, introduced by DeepMind in 2021, can process many types of input by mapping them into a compressed latent representation. A small, fixed-size latent array attends to the potentially very long input through cross-attention, so the cost grows linearly with input length instead of quadratically. This makes Perceiver much more efficient on long sequences and scalable to multimodal tasks.

| Model | Modality support | Key features |
|---|---|---|
| Perceiver | Text, images, video, audio | Latent representations, scalable attention |
| GATO | Text, images, Atari games, etc. | Handles multiple task types |

4. Advanced Multimodal Models: Flamingo and GATO

Flamingo and GATO, both introduced by DeepMind in 2022, represent a significant leap forward in multimodal models.

- Flamingo: Handles text interleaved with images and video. It is pre-trained across these modalities for tasks such as visual question answering and image captioning.
- GATO: A versatile transformer whose single set of weights applies to many tasks, including playing Atari games, handling text input, and captioning images. GATO integrates these capabilities into one unified model.
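The Perceiver's efficiency on long inputs, described in section 3, comes from letting a small latent array attend to the input, rather than having every input element attend to every other element. The sketch below shows a single cross-attention step in NumPy under simplifying assumptions. It uses one head and random weights in place of learned ones. It also leaves out layer normalization, the MLP, input position features, and the latent self-attention layers that the full model stacks afterward. The name `latent_cross_attention` is hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def latent_cross_attention(latents, inputs, seed=0):
    """Single-head cross-attention from a small latent array to a long input.

    latents: (N, D) array (learned in the real model, random here)
    inputs:  (M, C) array, e.g. one row per pixel or audio sample
    Queries come from the latents and keys/values from the inputs, so the
    attention matrix is (N, M) instead of the (M, M) of full self-attention.
    """
    d = latents.shape[1]
    c = inputs.shape[1]
    rng = np.random.default_rng(seed)
    w_q = rng.normal(0.0, d ** -0.5, size=(d, d))
    w_k = rng.normal(0.0, c ** -0.5, size=(c, d))
    w_v = rng.normal(0.0, c ** -0.5, size=(c, d))

    q = latents @ w_q                 # (N, D)
    k = inputs @ w_k                  # (M, D)
    v = inputs @ w_v                  # (M, D)
    attn = softmax(q @ k.T / np.sqrt(d), axis=-1)  # (N, M), rows sum to 1
    return latents + attn @ v, attn   # residual update keeps shape (N, D)


rng = np.random.default_rng(1)
pixels = rng.random((64 * 64, 3))     # a 64x64 RGB image as 4,096 input rows
latents = rng.normal(size=(128, 32))  # 128 latent vectors of width 32
updated, attn = latent_cross_attention(latents, pixels)
print(updated.shape)  # (128, 32): output size depends on the latents, not the input
print(attn.shape)     # (128, 4096) vs (4096, 4096) for full self-attention
```

Here the attention matrix has 128 × 4,096 = 524,288 entries. That is 32 times fewer than the 16,777,216 entries that full self-attention over the same 4,096 inputs would need. The gap widens as the input grows, because the latent size stays fixed.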

| Model | Task capabilities | Special features |
|---|---|---|
| Flamingo | Question answering, captioning | Trained on multiple modalities simultaneously |
| GATO | Image captioning, game playing | Unified model for different types of tasks |

Multimodal AI has advanced significantly, with models evolving to handle text, images, audio, and video together. Early breakthroughs like Flamingo and GATO laid the foundation. They were followed by Gemini models with improved long-context understanding and multimodal output. GPT-4o improved real-time interactions across multiple modalities, while Nvidia's Cosmos advanced video generation and robotics training. Meanwhile, DeepSeek emerged as a strong competitor, introducing Janus Pro for image generation and R1, an open-source reasoning model, and challenged established AI leaders.

These advancements are reshaping industries and driving integration into smart wearables, autonomous systems, and decision-making AI. With companies innovating and competing rapidly, multimodal AI keeps improving year by year, enabling more sophisticated and efficient applications across many domains.

2. Video Generation and Diffusion Models

Companies like Haiper are building models based on DiT (Diffusion Transformer) architectures. Haiper 2.0 lets users generate highly realistic videos from prompts, combining diffusion models with Transformer components to increase speed and efficiency. This has implications for video generation and the creative content industries.

3. Robotics and Transformer Efficiency

In robotics, Google's SARA-RT system refines the Transformer models used for robotic tasks, making them faster and more efficient. This improves real-time decision-making in robots, which is critical for practical applications such as autonomous driving and general real-world robotics tasks.

4. New Releases of LLMs

OpenAI and Meta have been at the forefront of developing large language models (LLMs), continually pushing the boundaries of natural language processing.

OpenAI's GPT-5 Development

OpenAI has been working on GPT-5, aiming to enhance reasoning capabilities and address limitations observed in previous models. However, development has faced challenges, including delays and substantial costs, and release was anticipated in early 2025. (wsj.com)

Meta's Llama 3 Series

Meta has made significant strides with its Llama series, culminating in the release of Llama 3.1. Its largest version has 405 billion parameters, supports multiple languages, and shows notable improvements in coding and complex mathematics. Llama 3.1 competes closely with other leading models in performance. (reuters.com)

These developments underscore the rapid evolution of LLMs. Each iteration brings enhanced capabilities and intensifies competition in AI research and applications. They are only a few examples of developments leading into 2025, but they show how quickly AI keeps improving from year to year.

Hugging Face Transformers – A Step-by-Step Guide with Code and Explanations

In this part of the blog post, I will guide you through Hugging Face's Transformers library, explaining what each code block does, why you need it, and how it works in real-world scenarios. This way, you won't just copy and paste code; you'll understand its purpose and how to use it effectively.

1. Getting Started with Hugging Face Pipelines

The easiest way to start with Hugging Face is the pipeline() function. A pipeline is a high-level abstraction that lets you quickly run pretrained models for tasks such as sentiment analysis, text generation, or text classification.

Why Use a Pipeline?

Pipelines are great when you want to solve a problem quickly without worrying about the…
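The snippet below is a minimal sketch of the pipeline() workflow described above, running sentiment analysis on two sentences. It assumes that the `transformers` package and a backend such as PyTorch are installed, and that the model can be downloaded from the Hugging Face Hub on first use. The checkpoint name is the DistilBERT model fine-tuned on SST-2. It is chosen here as an example and does not come from the original post.

```python
from transformers import pipeline

# Create a sentiment-analysis pipeline. Pinning the model name avoids relying
# on whichever default checkpoint the library picks for this task.
classifier = pipeline(
    "sentiment-analysis",
    model="distilbert/distilbert-base-uncased-finetuned-sst-2-english",
)

# A pipeline accepts a single string or a list of strings and returns one
# dict per input with a predicted label and a confidence score.
results = classifier([
    "I absolutely love how easy this library is to use!",
    "This was a frustrating waste of time.",
])

for r in results:
    print(f"{r['label']}: {r['score']:.3f}")
```

Each result is a dict with a `label` and a `score`. To try a different task, change the task string (for example, to "text-generation") and either drop the `model` argument or choose a checkpoint suited to that task.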

The Rise of Transformers in Vision and Multimodal Models – Hugging Face – day 72