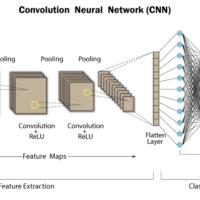

# Understanding Transfer Learning in Deep Neural Networks: A Step-by-Step Guide

In the realm of deep learning, transfer learning has become a powerful technique for leveraging pre-trained models to tackle new but related tasks. This approach not only reduces the time and computational resources required to train models from scratch but also often leads to better performance due to the reuse of already-learned features.

## What is Transfer Learning?

Transfer learning is a machine learning technique where a model developed for one task is reused as the starting point for a model on a second, similar task. For example, a model trained to recognize cars can be repurposed to recognize trucks, with some adjustments. This approach is particularly useful when you have a large, complex model that has been trained on a vast dataset, and you want to apply it to a smaller, related dataset without starting the learning process from scratch.

## Key Components of Transfer Learning

In transfer learning, there are several key components to understand:

- Base Model: This is the pre-trained model that was initially developed for a different task. It has already learned various features from a large dataset and can provide a strong starting point for the new task.
- New Model: This is the model you want to train for your new task. It will incorporate the layers or features from the base model but will also have new layers added or some layers adjusted to fit the new task requirements.
- Frozen Layers: When reusing layers from the base model, these layers can be “frozen,” meaning their weights will not be updated during training on the new task. This allows the model to retain the valuable features learned from the original task.
- Trainable Layers: These are the new or adjusted layers in the new model that will be trained on the new dataset. By fine-tuning these layers, the model can adapt to the specific needs of the new task.
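To make the split between frozen and trainable layers concrete, here is a minimal pure-Python sketch. It is an illustration under stated assumptions rather than how a deep learning framework implements freezing: `BASE_WEIGHTS`, `base_features`, `train_head`, and the toy dataset are hypothetical names and values invented for this example. The "base model" is a single hidden layer with fixed weights, and the "new model" adds a small logistic-regression head, which is the only part that gets updated.

```python
import copy
import math
import random

# Hypothetical "base model": one hidden layer whose weights stand in for
# features learned on a large source dataset (the values are made up).
BASE_WEIGHTS = [[0.8, -0.5], [-0.3, 0.9], [0.5, 0.5]]


def base_features(x):
    """Frozen layers: fixed weights that are never updated during training."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in BASE_WEIGHTS]


def predict(head, x):
    """Trainable layers: a logistic-regression head on the frozen features."""
    z = sum(w * f for w, f in zip(head["w"], base_features(x))) + head["b"]
    return 1.0 / (1.0 + math.exp(-z))


def mean_loss(head, data):
    """Average binary cross-entropy over the dataset."""
    total = 0.0
    for x, y in data:
        p = min(max(predict(head, x), 1e-12), 1 - 1e-12)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(data)


def train_head(head, data, lr=0.5, epochs=200):
    """Update only the head's parameters with plain stochastic gradient descent."""
    for _ in range(epochs):
        for x, y in data:
            feats = base_features(x)
            error = predict(head, x) - y  # d(loss)/dz for sigmoid + cross-entropy
            head["w"] = [w - lr * error * f for w, f in zip(head["w"], feats)]
            head["b"] -= lr * error
    return head


# Toy target task (an assumption): label is 1 when the first input exceeds the second.
rng = random.Random(0)
points = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(40)]
data = [(x, 1 if x[0] > x[1] else 0) for x in points]

frozen_snapshot = copy.deepcopy(BASE_WEIGHTS)
head = {"w": [0.0, 0.0, 0.0], "b": 0.0}
loss_before = mean_loss(head, data)
train_head(head, data)
loss_after = mean_loss(head, data)

print(f"loss before: {loss_before:.3f}, after: {loss_after:.3f}")
print("base weights unchanged:", BASE_WEIGHTS == frozen_snapshot)
```

In a real framework the same idea is usually expressed by marking the base model's parameters as non-trainable and passing only the new layers' parameters to the optimizer. The sketch above simply never touches `BASE_WEIGHTS` inside `train_head`.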
## How Does Transfer Learning Work?

Imagine you have a deep neural network that was trained to classify images of animals into categories like dogs, cats, and birds. Now, you want to adapt this model to classify a new set of images, say, different breeds of dogs. The process of transfer learning might look something…