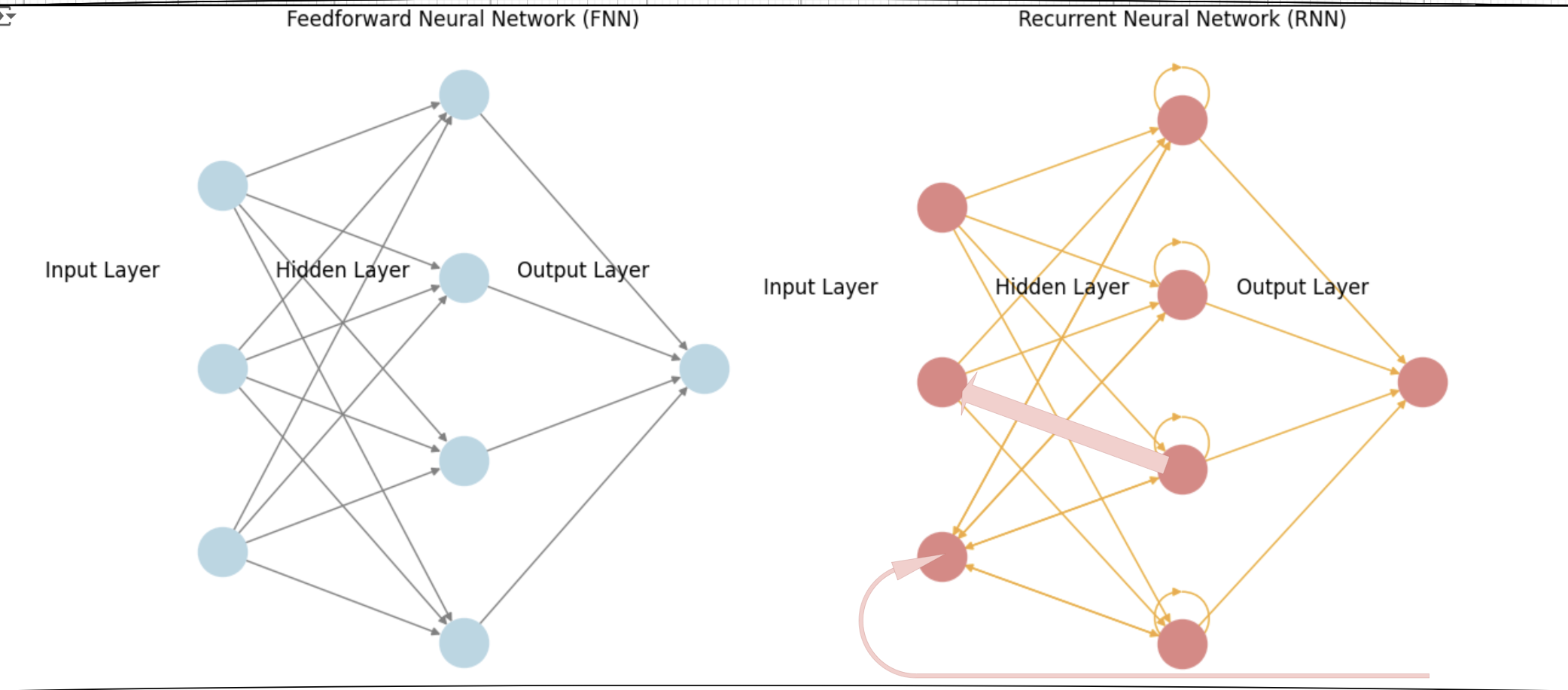

In this article we walk through a small worked example of a feedforward neural network (FNN) and a recurrent neural network (RNN), comparing the two to understand the math behind each one better:

Neural Networks Example

Example Setup

- Input for FNN: $x$

- Target Output for FNN: $y$

RNNs are tailored for sequential data: they are designed to remember and use information from previous inputs in a sequence, which lets them capture temporal relationships and context. This distinguishes RNNs from neural network types that are not inherently sequence-aware.

- Input for RNN (Sequence): $X = [x_1, x_2] = [0.5, 0.7]$

- Target Output for RNN (Sequence): $Y = [y_1, y_2] = [0.8, 0.9]$

- Learning Rate: $\eta$

1. Feedforward Neural Network (FNN)

Structure

- Input Layer: 1 neuron

- Hidden Layer: 1 neuron

- Output Layer: 1 neuron

Weights and Biases

- Initial Weights:

  - $w_1$ (Input to Hidden weight)

  - $w_2$ (Hidden to Output weight)

- Biases:

  - $b_1$ (Hidden layer bias)

  - $b_2$ (Output layer bias)

Step-by-Step Calculation for FNN

Step 1: Forward Pass

- Hidden Layer Output: $h = f(w_1 x + b_1)$, where $f$ is the hidden-layer activation function

- Output: $\hat{y} = w_2 h + b_2$
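The forward pass can be sketched in Python as follows. This is a minimal sketch under assumptions: $f$ is taken to be tanh, the output layer is linear, and the values of $x$, $w_1$, $w_2$, $b_1$, and $b_2$ are hypothetical placeholders rather than the article's numbers; `fnn_forward` is an illustrative name.

```python
import math

# Hypothetical values (assumptions, not taken from the article).
x = 0.5               # input
w1, b1 = 0.5, 0.1     # input-to-hidden weight and hidden bias
w2, b2 = 0.3, 0.2     # hidden-to-output weight and output bias

def f(z: float) -> float:
    """Hidden activation; tanh is an assumed choice."""
    return math.tanh(z)

def fnn_forward(x: float) -> tuple[float, float]:
    """Return (hidden output h, network output y_hat) for one input."""
    h = f(w1 * x + b1)      # hidden layer: weighted input plus bias, then activation
    y_hat = w2 * h + b2     # output layer: linear in h
    return h, y_hat

h, y_hat = fnn_forward(x)
print(f"h = {h:.4f}, y_hat = {y_hat:.4f}")
```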

Step 2: Loss Calculation

Using Mean Squared Error (MSE) for a single example:

$$L = (\hat{y} - y)^2$$
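The loss for one example is plain arithmetic once $\hat{y}$ is known. This sketch assumes tanh for $f$ and hypothetical values for the input, target, and parameters; `mse_loss` is an illustrative helper name.

```python
import math

# Hypothetical input, target, and parameters (assumptions).
x, y = 0.5, 0.8
w1, b1, w2, b2 = 0.5, 0.1, 0.3, 0.2

def mse_loss(y_hat: float, y: float) -> float:
    """Squared error for a single prediction (MSE with one sample)."""
    return (y_hat - y) ** 2

h = math.tanh(w1 * x + b1)   # tanh hidden activation is an assumption
y_hat = w2 * h + b2          # linear output
loss = mse_loss(y_hat, y)
print(f"y_hat = {y_hat:.4f}, loss = {loss:.4f}")
```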

Step 3: Backward Pass

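- Gradient of Loss with respect to Output: $\frac{\partial L}{\partial \hat{y}} = 2(\hat{y} - y)$

- Gradient of Output with respect to Hidden Layer: $\frac{\partial \hat{y}}{\partial h} = w_2$

- Gradient of Hidden Layer Output with respect to Weights: $\frac{\partial h}{\partial w_1} = f'(w_1 x + b_1)\, x$

Applying the chain rule:

$$\frac{\partial L}{\partial w_2} = \frac{\partial L}{\partial \hat{y}} \cdot h$$

$$\frac{\partial L}{\partial w_1} = \frac{\partial L}{\partial \hat{y}} \cdot w_2 \cdot f'(w_1 x + b_1) \cdot x$$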
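The chain-rule gradients can be checked numerically. This sketch assumes tanh for $f$ (so $f'(z) = 1 - \tanh^2 z$), a linear output, $L = (\hat{y} - y)^2$, and hypothetical values for every parameter; it compares the analytic $\partial L/\partial w_1$ and $\partial L/\partial w_2$ with central finite differences.

```python
import math

# Hypothetical values; tanh hidden activation and linear output are assumptions.
x, y = 0.5, 0.8
w1, b1, w2, b2 = 0.5, 0.1, 0.3, 0.2

def loss_fn(w1: float, w2: float) -> float:
    """Squared-error loss as a function of the two weights (biases fixed)."""
    h = math.tanh(w1 * x + b1)
    return (w2 * h + b2 - y) ** 2

# Forward pass, keeping intermediates for the chain rule.
z = w1 * x + b1
h = math.tanh(z)
y_hat = w2 * h + b2

dL_dyhat = 2 * (y_hat - y)                  # dL/dy_hat for L = (y_hat - y)^2
dyhat_dh = w2                               # y_hat = w2*h + b2
dh_dz = 1 - h ** 2                          # derivative of tanh at z
dL_dw2 = dL_dyhat * h                       # dy_hat/dw2 = h
dL_dw1 = dL_dyhat * dyhat_dh * dh_dz * x    # dz/dw1 = x

# Central finite differences as a sanity check on the chain-rule result.
eps = 1e-6
num_dw1 = (loss_fn(w1 + eps, w2) - loss_fn(w1 - eps, w2)) / (2 * eps)
num_dw2 = (loss_fn(w1, w2 + eps) - loss_fn(w1, w2 - eps)) / (2 * eps)
print(f"dL/dw1 = {dL_dw1:.6f} (numeric {num_dw1:.6f})")
print(f"dL/dw2 = {dL_dw2:.6f} (numeric {num_dw2:.6f})")
```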

Step 4: Weight Update

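- Update Output Weight: $w_2 \leftarrow w_2 - \eta \frac{\partial L}{\partial w_2}$

- Update Input Weight: $w_1 \leftarrow w_1 - \eta \frac{\partial L}{\partial w_1}$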
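A single gradient-descent step on this tiny network can be sketched as follows, assuming tanh for $f$, a hypothetical learning rate $\eta = 0.1$, and hypothetical parameter values. Both gradients are computed before either weight changes, since $\partial L/\partial w_1$ depends on the old $w_2$.

```python
import math

# Hypothetical values; eta stands in for the learning rate.
x, y, eta = 0.5, 0.8, 0.1
w1, b1, w2, b2 = 0.5, 0.1, 0.3, 0.2

def forward(w1: float, w2: float) -> tuple[float, float]:
    """Return (h, y_hat) with a tanh hidden layer and a linear output."""
    h = math.tanh(w1 * x + b1)
    return h, w2 * h + b2

def loss(w1: float, w2: float) -> float:
    """Squared-error loss for the current weights."""
    return (forward(w1, w2)[1] - y) ** 2

h, y_hat = forward(w1, w2)
dL_dyhat = 2 * (y_hat - y)
grad_w2 = dL_dyhat * h
grad_w1 = dL_dyhat * w2 * (1 - h ** 2) * x

# Gradient-descent step: both gradients were computed from the old weights.
new_w1 = w1 - eta * grad_w1
new_w2 = w2 - eta * grad_w2
print(f"loss before: {loss(w1, w2):.4f}, after: {loss(new_w1, new_w2):.4f}")
```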

2. Recurrent Neural Network (RNN)

Structure

- Input Layer: 1 neuron

- Hidden Layer: 1 neuron

- Output Layer: 1 neuron

Weights and Biases

- Initial Weights:

  - $W_{xh}$ (Input to Hidden weight)

  - $W_{hh}$ (Hidden to Hidden weight)

  - $W_{hy}$ (Hidden to Output weight)

- Biases:

  - $b_h$ (Hidden layer bias)

  - $b_y$ (Output layer bias)

Step-by-Step Calculation for RNN

Step 1: Forward Pass

Assume an initial hidden state $h_0 = 0$. This is where the memory concept starts: the hidden state retains information from previous time steps.

- For $t = 1$ (Input $x_1 = 0.5$):

  - Hidden State: $h_1 = f(W_{xh} x_1 + W_{hh} h_0 + b_h)$

    Here, $h_1$ is influenced by the previous hidden state $h_0$ (which is 0). This demonstrates how the RNN maintains memory: the hidden state captures the relevant information to influence future computations.

  - Output: $\hat{y}_1 = W_{hy} h_1 + b_y$

- For $t = 2$ (Input $x_2 = 0.7$):

  - Hidden State: $h_2 = f(W_{xh} x_2 + W_{hh} h_1 + b_h)$

    In this step, $h_2$ is influenced by both the current input $x_2$ and the previous hidden state $h_1$. This reflects the memory of the previous input and its influence on the current state.

  - Output: $\hat{y}_2 = W_{hy} h_2 + b_y$
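The two RNN time steps can be unrolled in a short loop. This sketch uses the example sequence $X = [0.5, 0.7]$ but assumes tanh for $f$ and hypothetical values for $W_{xh}$, $W_{hh}$, $W_{hy}$, $b_h$, and $b_y$; the helper name `rnn_forward` is illustrative.

```python
import math

# Sequence from the example; the parameter values are hypothetical.
X = [0.5, 0.7]
W_xh, W_hh, W_hy = 0.5, 0.4, 0.3   # assumed weights
b_h, b_y = 0.1, 0.2                # assumed biases

def rnn_forward(xs, h0=0.0):
    """Unroll a one-neuron RNN; return hidden states [h_0, h_1, ...] and outputs."""
    hs, ys = [h0], []
    for x_t in xs:
        # The previous hidden state hs[-1] feeds into the new one: this is the memory.
        h_t = math.tanh(W_xh * x_t + W_hh * hs[-1] + b_h)
        hs.append(h_t)
        ys.append(W_hy * h_t + b_y)
    return hs, ys

hs, ys = rnn_forward(X)
for t in (1, 2):
    print(f"t={t}: h_{t} = {hs[t]:.4f}, y_hat_{t} = {ys[t - 1]:.4f}")
```

Storing $h_0$ at index 0 keeps the indices aligned with the math: `hs[t]` is $h_t$, and `hs[t - 1]` is the state it was computed from.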

Step 2: Loss Calculation

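Using Mean Squared Error (MSE) for the sequence:

- For $t = 1$: $L_1 = (\hat{y}_1 - y_1)^2$, with $y_1 = 0.8$

- For $t = 2$: $L_2 = (\hat{y}_2 - y_2)^2$, with $y_2 = 0.9$

Total Loss:

$$L = L_1 + L_2$$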
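The per-step losses and their sum can be computed in the same loop as the forward pass. The sequence and targets come from the example; the parameter values and the tanh activation are assumptions.

```python
import math

X, Y = [0.5, 0.7], [0.8, 0.9]                           # sequence and targets from the example
W_xh, W_hh, W_hy, b_h, b_y = 0.5, 0.4, 0.3, 0.1, 0.2    # hypothetical parameters

h = 0.0                      # h_0
step_losses = []
for x_t, y_t in zip(X, Y):
    h = math.tanh(W_xh * x_t + W_hh * h + b_h)   # h_t from x_t and h_{t-1}
    y_hat = W_hy * h + b_y
    step_losses.append((y_hat - y_t) ** 2)       # L_t = (y_hat_t - y_t)^2

total_loss = sum(step_losses)                    # L = L_1 + L_2
print(f"L_1 = {step_losses[0]:.4f}, L_2 = {step_losses[1]:.4f}, L = {total_loss:.4f}")
```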

Step 3: Backward Pass (BPTT)

This is where backpropagation through time takes place. The gradients account for how each hidden state affects the output at its own time step and, through the recurrence, at every later step.

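- Gradient of Loss w.r.t Output:

  - For $t = 2$: $\frac{\partial L}{\partial \hat{y}_2} = 2(\hat{y}_2 - y_2)$

  - For $t = 1$: $\frac{\partial L}{\partial \hat{y}_1} = 2(\hat{y}_1 - y_1)$

- Gradient of Output w.r.t Hidden: $\frac{\partial \hat{y}_t}{\partial h_t} = W_{hy}$

- Gradient of Hidden States:

  - For $t = 2$: $\frac{\partial L}{\partial h_2} = \frac{\partial L}{\partial \hat{y}_2} W_{hy}$

  - For $t = 1$: $\frac{\partial L}{\partial h_1} = \frac{\partial L}{\partial \hat{y}_1} W_{hy} + \frac{\partial L}{\partial h_2} f'(W_{xh} x_2 + W_{hh} h_1 + b_h) W_{hh}$

- Memory Influence: The hidden state $h_2$ depends on $h_1$ and the current input $x_2$. The gradient for $h_1$ therefore also collects the contribution flowing back from $t = 2$, which is how the gradients account for the memory stored in previous hidden states.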

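- Gradient for Weights (summed over both time steps, with pre-activation $a_t = W_{xh} x_t + W_{hh} h_{t-1} + b_h$ so that $h_t = f(a_t)$):

  - For $W_{hy}$: $\frac{\partial L}{\partial W_{hy}} = \sum_{t=1}^{2} \frac{\partial L}{\partial \hat{y}_t} h_t$

  - For $W_{xh}$: $\frac{\partial L}{\partial W_{xh}} = \sum_{t=1}^{2} \frac{\partial L}{\partial h_t} f'(a_t)\, x_t$

  - For $W_{hh}$: $\frac{\partial L}{\partial W_{hh}} = \sum_{t=1}^{2} \frac{\partial L}{\partial h_t} f'(a_t)\, h_{t-1}$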
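Backpropagation through time for this two-step RNN fits in one backward loop. The sketch below assumes tanh for $f$, hypothetical parameter values, and omits bias gradients as the steps above do. It walks from $t = 2$ back to $t = 1$, carrying the gradient that flows into $h_1$ from $h_2$, and then compares each weight gradient with a central finite difference. The names `forward`, `total_loss`, and `bptt` are illustrative.

```python
import math

X, Y = [0.5, 0.7], [0.8, 0.9]
params = {"W_xh": 0.5, "W_hh": 0.4, "W_hy": 0.3, "b_h": 0.1, "b_y": 0.2}  # hypothetical

def forward(p):
    """Return hidden states [h_0, h_1, h_2] and outputs [y_hat_1, y_hat_2]."""
    hs, ys = [0.0], []
    for x_t in X:
        hs.append(math.tanh(p["W_xh"] * x_t + p["W_hh"] * hs[-1] + p["b_h"]))
        ys.append(p["W_hy"] * hs[-1] + p["b_y"])
    return hs, ys

def total_loss(p):
    _, ys = forward(p)
    return sum((y_hat - y) ** 2 for y_hat, y in zip(ys, Y))

def bptt(p):
    """Backpropagation through time for the three weights (bias gradients omitted)."""
    hs, ys = forward(p)
    grads = {"W_xh": 0.0, "W_hh": 0.0, "W_hy": 0.0}
    dh_next = 0.0   # gradient reaching h_t from the later step (zero at the last step)
    for t in reversed(range(len(X))):       # index 1 then 0: time step 2, then 1
        dy = 2 * (ys[t] - Y[t])              # dL/dy_hat_t
        grads["W_hy"] += dy * hs[t + 1]      # hs[t + 1] is h_t
        dh = dy * p["W_hy"] + dh_next        # total dL/dh_t: own output plus the future
        da = dh * (1 - hs[t + 1] ** 2)       # through tanh: dL/da_t
        grads["W_xh"] += da * X[t]
        grads["W_hh"] += da * hs[t]          # hs[t] is h_{t-1}
        dh_next = da * p["W_hh"]             # pass gradient back to h_{t-1}
    return grads

grads = bptt(params)
eps = 1e-6
for name, g in grads.items():
    plus, minus = dict(params), dict(params)
    plus[name] += eps
    minus[name] -= eps
    numeric = (total_loss(plus) - total_loss(minus)) / (2 * eps)
    print(f"dL/d{name}: BPTT {g:.6f}, numeric {numeric:.6f}")
```

Reversing the loop is the essential design choice: $\partial L/\partial h_1$ can only be completed once the contribution from $t = 2$ is known.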

Step 4: Weight Update

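- Update Weights (with learning rate $\eta$):

  - For $W_{hy}$: $W_{hy} \leftarrow W_{hy} - \eta \frac{\partial L}{\partial W_{hy}}$

  - For $W_{xh}$: $W_{xh} \leftarrow W_{xh} - \eta \frac{\partial L}{\partial W_{xh}}$

  - For $W_{hh}$: $W_{hh} \leftarrow W_{hh} - \eta \frac{\partial L}{\partial W_{hh}}$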
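Repeating the update a few times shows the total loss over the sequence falling. This is a sketch with an assumed learning rate $\eta = 0.1$, hypothetical starting parameters, tanh activation, and fixed biases; the function name `loss_and_grads` is illustrative.

```python
import math

X, Y, eta = [0.5, 0.7], [0.8, 0.9], 0.1   # eta: hypothetical learning rate
p = {"W_xh": 0.5, "W_hh": 0.4, "W_hy": 0.3, "b_h": 0.1, "b_y": 0.2}  # hypothetical

def loss_and_grads(p):
    """Forward pass plus BPTT for the three weights; biases are left fixed."""
    hs, ys = [0.0], []
    for x_t in X:
        hs.append(math.tanh(p["W_xh"] * x_t + p["W_hh"] * hs[-1] + p["b_h"]))
        ys.append(p["W_hy"] * hs[-1] + p["b_y"])
    loss = sum((yh - y) ** 2 for yh, y in zip(ys, Y))
    g = {"W_xh": 0.0, "W_hh": 0.0, "W_hy": 0.0}
    dh_next = 0.0
    for t in reversed(range(len(X))):
        dy = 2 * (ys[t] - Y[t])
        g["W_hy"] += dy * hs[t + 1]
        da = (dy * p["W_hy"] + dh_next) * (1 - hs[t + 1] ** 2)
        g["W_xh"] += da * X[t]
        g["W_hh"] += da * hs[t]
        dh_next = da * p["W_hh"]
    return loss, g

losses = []
for step in range(5):
    loss, g = loss_and_grads(p)
    losses.append(loss)
    for name in g:              # W <- W - eta * dL/dW, all from the same gradients
        p[name] -= eta * g[name]
    print(f"step {step}: total loss {loss:.4f}")
```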

Summary Table

Feedforward Neural Network (FNN)

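| Step | Calculation |
|---|---|
| Forward Pass | $h = f(w_1 x + b_1)$, $\hat{y} = w_2 h + b_2$ |
| Loss | $L = (\hat{y} - y)^2$ |
| Gradient (Output) | $\frac{\partial L}{\partial \hat{y}} = 2(\hat{y} - y)$ |
| Weight Update | $w_i \leftarrow w_i - \eta \frac{\partial L}{\partial w_i}$ |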

Recurrent Neural Network (RNN)

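| Step | Calculation | Explanation |
|---|---|---|
| Forward Pass | $h_1 = f(W_{xh} x_1 + W_{hh} h_0 + b_h)$ | Hidden state $h_1$ starts from $h_0 = 0$. |
| | $h_2 = f(W_{xh} x_2 + W_{hh} h_1 + b_h)$ | Hidden state $h_2$ carries the memory of $h_1$. |
| Loss | $L = L_1 + L_2$ | Total loss calculated across the sequence. |
| Gradient (Output) | $\frac{\partial L}{\partial \hat{y}_t} = 2(\hat{y}_t - y_t)$ for $t = 1, 2$ | Gradients computed for each time-step output. |
| Weight Update | $W \leftarrow W - \eta \frac{\partial L}{\partial W}$ | Weights updated based on contributions from all previous states. |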

Key Takeaways

- FNN: Each input is treated independently, and the backpropagation process is straightforward.

- RNN: The model retains memory of previous states through the hidden state, making the calculations for gradients more complex, especially during backpropagation through time (BPTT). Each hidden state influences subsequent outputs and reflects the model’s ability to remember past inputs.

Another concept to understand here is that memorization in RNNs happens through the hidden states. Each hidden state $h_t$ carries information from previous inputs:

- At time step $t = 1$, the hidden state $h_1$ is influenced by the initial hidden state $h_0$ (which is 0).

- At time step $t = 2$, the hidden state $h_2$ is influenced by both the current input $x_2$ and the previous hidden state $h_1$.
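To see the memory effect directly, the sketch below keeps $x_2 = 0.7$ fixed and changes only $x_1$. The parameter values and tanh activation are assumptions, and `second_output` and `fnn_output` are hypothetical helper names.

```python
import math

W_xh, W_hh, W_hy, b_h, b_y = 0.5, 0.4, 0.3, 0.1, 0.2   # hypothetical parameters

def second_output(x1: float, x2: float) -> float:
    """Return y_hat_2 of a one-neuron RNN for the sequence [x1, x2], with h_0 = 0."""
    h1 = math.tanh(W_xh * x1 + W_hh * 0.0 + b_h)
    h2 = math.tanh(W_xh * x2 + W_hh * h1 + b_h)
    return W_hy * h2 + b_y

def fnn_output(x: float) -> float:
    """Same weights without recurrence: the output sees only the current input."""
    return W_hy * math.tanh(W_xh * x + b_h) + b_y

# Same current input x2 = 0.7, different earlier input x1.
rnn_a = second_output(0.5, 0.7)
rnn_b = second_output(-0.5, 0.7)
print(f"RNN y_hat_2 with x1=0.5: {rnn_a:.4f}, with x1=-0.5: {rnn_b:.4f}")
print(f"FNN output for x=0.7 (no history): {fnn_output(0.7):.4f}")
```

The RNN's second output changes with $x_1$ because $h_1$ feeds into $h_2$ through $W_{hh}$; the feedforward version has no $W_{hh} h_{t-1}$ term, so for a given input it returns the same value regardless of what came before.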

Our brief example explained the math behind Feedforward Neural Networks (FNNs) and Recurrent Neural Networks (RNNs) in a simple way. Highlighting their mathematical differences makes each model easier to understand.
