Before reading this article, we recommend checking our previous article on what a diffusion model is.

How DALL-E Works: A Comprehensive Guide to Text-to-Image Generation

DALL-E, developed by OpenAI, is a revolutionary model that translates text prompts into detailed images using a complex, layered architecture. Recent updates, centered on DALL-E 3 and its 2024 follow-ups, bring higher image fidelity, closer adherence to the specifics of a prompt, and a classifier for identifying AI-generated images. This post explores DALL-E’s architecture and workflow, using tables and figures to simplify the technical aspects.

1. Core Components of DALL-E

DALL-E integrates multiple components to process text and generate images. Each part has a unique role, as shown in Table 1.

| Component | Purpose | Description |
|---|---|---|
| Transformer | Text understanding | Converts the text prompt into numerical embeddings that capture its meaning and context. |
| Multimodal Transformer | Mapping text to image space | Transforms the text embedding into a visual representation that guides the image’s layout and high-level features. |
| Diffusion Model | Image generation | Uses iterative denoising to turn random noise into an image that matches the prompt’s visual features. |
| Attention Mechanisms | Focus on image details | Let each region of the image attend to the most relevant parts of the prompt (and to other regions) during generation, which helps render fine details such as textures, edges, and lighting. |
| Classifier-Free Guidance | Prompt fidelity | Keeps the image faithful to the prompt by blending the model’s text-conditioned and unconditioned predictions; a guidance scale sets how strongly the text steers each step. |
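
Classifier-free guidance is usually written as a blend of two noise predictions from the same network, one conditioned on the prompt and one on an empty prompt: ε̂ = ε_uncond + w · (ε_cond − ε_uncond), where the guidance scale w controls how strongly the prompt steers each denoising step. The NumPy sketch below shows only that arithmetic; the arrays are toy stand-ins for real model outputs, and OpenAI has not published the guidance scale or exact formulation DALL-E 3 uses.

```python
import numpy as np


def classifier_free_guidance(eps_uncond: np.ndarray,
                             eps_cond: np.ndarray,
                             guidance_scale: float) -> np.ndarray:
    """Combine unconditional and prompt-conditioned noise predictions.

    guidance_scale = 0 ignores the prompt, 1 returns the conditional
    prediction unchanged, and values > 1 push further toward the prompt.
    """
    return eps_uncond + guidance_scale * (eps_cond - eps_uncond)


# Toy stand-ins for two predictions from the same denoising network
eps_uncond = np.array([0.10, -0.20, 0.05])  # prediction with an empty prompt
eps_cond = np.array([0.30, -0.10, 0.00])    # prediction with the user's prompt

for w in (0.0, 1.0, 4.0):
    print(w, classifier_free_guidance(eps_uncond, eps_cond, w))
```

Larger values of w typically improve prompt adherence at some cost to diversity, which is why the scale is exposed as a tunable parameter in many open diffusion models.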

Recent updates: DALL-E 3, the latest version (released in late 2023), aligns text and visual representations more closely than its predecessors, enabling more accurate and intricate images. It handles difficult details such as hands, faces, and text embedded in images better than earlier versions, giving users sharper, more lifelike results.

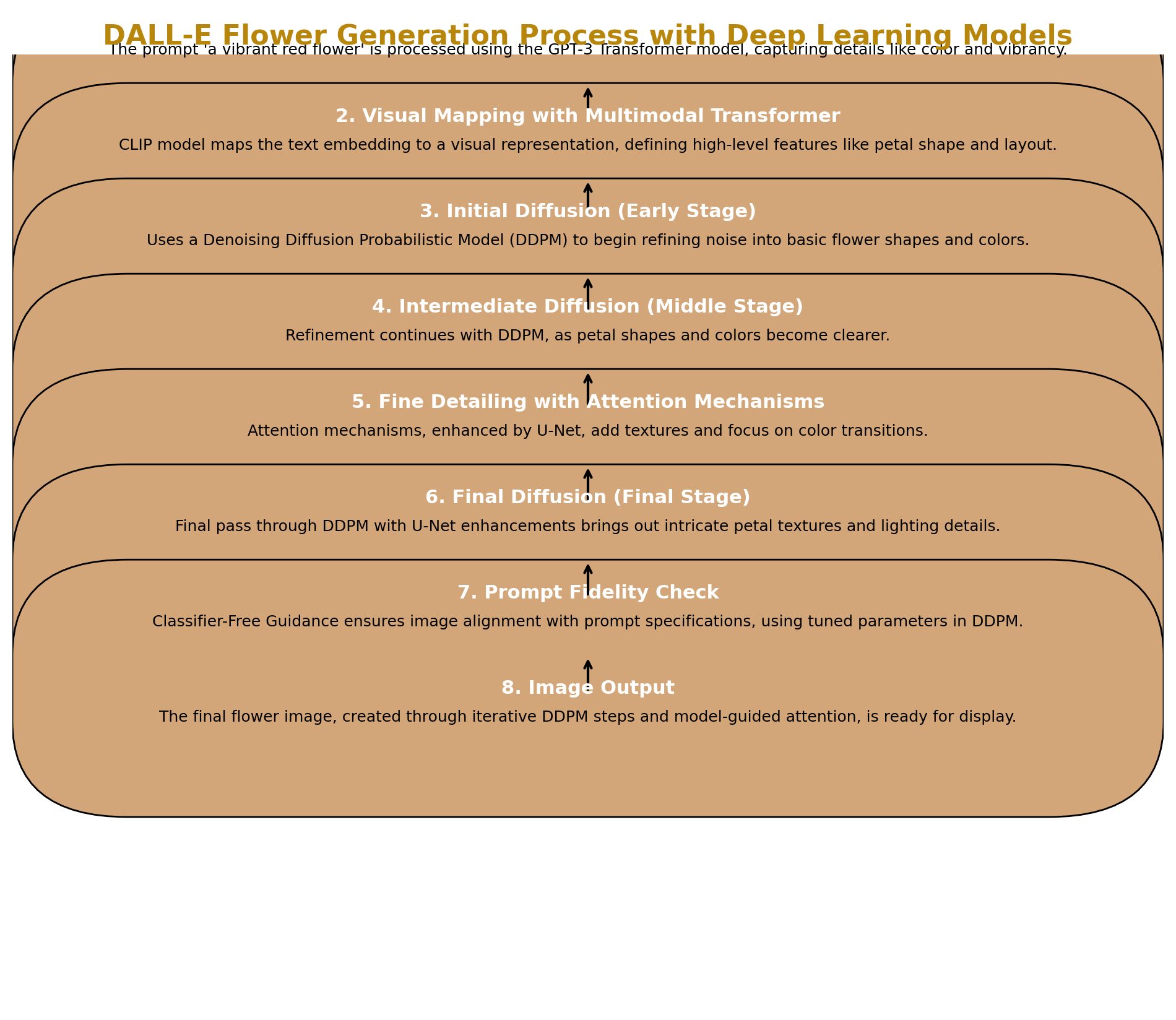

2. Step-by-Step Workflow of DALL-E

DALL-E’s workflow translates a text prompt into an image through multiple stages, as outlined below.

1. Interpreting the Prompt with Transformers: A transformer embeds the text prompt, capturing its context and meaning as the basis for the image.

2. Mapping Text to Visual Space: The text embedding is mapped into a visual representation that defines the image’s high-level features.

3. Image Generation through Diffusion: Iterative denoising moves the image, step by step, closer to the prompt.

4. Enhancing Detail with Attention Mechanisms: Attention focuses on textures and spatial details for added realism.

5. Ensuring Prompt Fidelity with Classifier-Free Guidance: Guidance balances the prompt’s influence so the image stays aligned with the user’s description.
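
In text-conditioned diffusion models, step 4 is commonly implemented with cross-attention inside the denoising network: each image position forms a query, and the prompt’s token embeddings supply the keys and values. The NumPy sketch below shows single-head scaled dot-product cross-attention under that assumption; the dimensions and random weight matrices are placeholders of our own, and OpenAI has not published DALL-E 3’s exact attention layers.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the row max for numerical stability
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(image_feats, text_embeds, w_q, w_k, w_v):
    """Let each image position attend over the prompt tokens.

    image_feats: (num_patches, d_img)  queries come from the image
    text_embeds: (num_tokens, d_txt)   keys/values come from the prompt
    Returns (updated features, attention weights).
    Real models also add an output projection and a residual connection.
    """
    q = image_feats @ w_q                     # (num_patches, d_head)
    k = text_embeds @ w_k                     # (num_tokens, d_head)
    v = text_embeds @ w_v                     # (num_tokens, d_head)
    scores = q @ k.T / np.sqrt(q.shape[-1])   # (num_patches, num_tokens)
    weights = softmax(scores, axis=-1)        # each row sums to 1
    return weights @ v, weights


rng = np.random.default_rng(0)
num_patches, num_tokens, d_img, d_txt, d_head = 16, 5, 32, 24, 8
image_feats = rng.normal(size=(num_patches, d_img))
text_embeds = rng.normal(size=(num_tokens, d_txt))
# Random projections stand in for learned weights (hypothetical values)
w_q = rng.normal(size=(d_img, d_head))
w_k = rng.normal(size=(d_txt, d_head))
w_v = rng.normal(size=(d_txt, d_head))

out, weights = cross_attention(image_feats, text_embeds, w_q, w_k, w_v)
print(out.shape, weights.shape)
```

Each row of `weights` shows how much one image patch draws on each prompt token, which is how a word like "red" can influence only the regions it describes.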

3. Denoising Stages: A Visual Breakdown

Denoising is central to DALL-E’s generation process: over many steps, random noise is gradually refined into a coherent image.

Early Stage: Shapes and colors emerge.

Middle Stage: Structure becomes visible.

Final Stage: Lighting, texture, and intricate edges are refined to complete the image.
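
The loop below is a minimal sketch of iterative denoising using the standard DDPM update (Ho et al., 2020) on a toy 1-D "image". A real system uses a trained network to predict the noise; here a hypothetical `oracle_noise_predictor` that already knows the target stands in so the loop can run end to end. It illustrates the mechanics of the stages above, not DALL-E’s actual sampler, which OpenAI has not published in detail.

```python
import numpy as np


def make_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule as in the original DDPM paper."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    return betas, alphas, alpha_bars


def oracle_noise_predictor(x_t, t, x0, alpha_bars):
    # Hypothetical stand-in for the trained network: it "knows" the clean
    # target x0, so it can recover the exact noise contained in x_t.
    return (x_t - np.sqrt(alpha_bars[t]) * x0) / np.sqrt(1.0 - alpha_bars[t])


def reverse_diffusion(x0, num_steps=1000, seed=0):
    rng = np.random.default_rng(seed)
    betas, alphas, alpha_bars = make_schedule(num_steps)
    checkpoints = (num_steps * 9 // 10, num_steps // 2, 0)  # early, middle, final
    x = rng.normal(size=x0.shape)  # start from pure Gaussian noise
    history = {}
    for t in reversed(range(num_steps)):
        eps_hat = oracle_noise_predictor(x, t, x0, alpha_bars)
        # DDPM update: subtract the predicted noise, then rescale
        x = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps_hat) / np.sqrt(alphas[t])
        if t > 0:
            # Inject fresh noise on every step except the last one
            x = x + np.sqrt(betas[t]) * rng.normal(size=x.shape)
        if t in checkpoints:
            history[t] = np.corrcoef(x, x0)[0, 1]  # similarity to the target
    return x, history


target = np.sin(np.linspace(0, 4 * np.pi, 64))  # toy 1-D "image"
sample, history = reverse_diffusion(target)
for t, corr in sorted(history.items(), reverse=True):
    print(f"step {t}: correlation with target = {corr:.3f}")
```

The correlation with the target stays near zero early on and rises as the noise is removed; with a real model, the same loop is where coarse shapes appear first and fine detail last.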

4. 2024 Enhancements: Efficiency, Control, and Fidelity

Recent updates in 2024 have introduced several advancements that increase DALL-E’s usability and control.

| Feature | Description | Benefit |
|---|---|---|
| Enhanced Text Embedding | Captures specific details from prompts for more nuanced images. | Higher prompt fidelity. |
| Provenance Classifier | Identifies images generated by DALL-E 3 with high accuracy, helping verify authenticity. | Supports responsible AI use. |
| Improved Safety Protocols | Declines requests for images in the style of living artists and limits images of public figures to reduce misuse. | Encourages ethical use and respects intellectual property rights. |
| Interactive Prompt Adjustments | Integration with ChatGPT lets users refine prompts conversationally in real time. | Makes it easier to iterate on an image. |
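
Outside ChatGPT, DALL-E 3 is also available through OpenAI’s Images API, which likewise rewrites the prompt before generating and returns the rewritten version as `revised_prompt`. The sketch below assumes the official `openai` Python package (v1 interface); the helper name `generate_image` and the default parameter values are our own choices, and available options may change, so check the current API reference before relying on them.

```python
def generate_image(client, prompt: str, size: str = "1024x1024") -> dict:
    """Request one DALL-E 3 image and return its URL plus the rewritten prompt.

    `client` is assumed to be an `openai.OpenAI()` instance (openai>=1.0).
    `generate_image` is a hypothetical helper, not part of the SDK.
    """
    response = client.images.generate(
        model="dall-e-3",
        prompt=prompt,
        size=size,
        quality="standard",
        n=1,  # dall-e-3 accepts only one image per request
    )
    image = response.data[0]
    return {"url": image.url, "revised_prompt": image.revised_prompt}


# Example usage (requires the `openai` package and an OPENAI_API_KEY):
#   from openai import OpenAI
#   result = generate_image(OpenAI(), "a watercolor fox reading a newspaper")
#   print(result["revised_prompt"])
```

Comparing `revised_prompt` with your original text is a quick way to see which details the model added or changed, which helps when refining a prompt.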

5. Applications and Use Cases

DALL-E’s advancements open up numerous applications across fields.

| Application Area | Description | Example Use Case |
|---|---|---|
| Art and Design | Generates artwork and illustrations based on descriptive prompts. | Creating unique digital art pieces. |
| Scientific Visualization | Produces educational visuals based on complex scientific concepts. | Illustrating biological processes or astronomical phenomena. |
| Marketing and Media | Creates engaging visuals tailored to specific marketing needs. | Designing custom images for ad campaigns. |
| Interdisciplinary Research | Turns complex ideas into visual form for better understanding. | Illustrating concepts in research papers and presentations. |

Conclusion

DALL-E’s architecture, bolstered by transformers, diffusion processes, and new 2024 enhancements, brings text prompts to life in visually compelling images. With features like enhanced fidelity, real-time prompt editing, and robust content verification, DALL-E continues to lead the field of text-to-image generation.

For more information, visit OpenAI’s DALL-E documentation and system card.